AI/ML Model Operations

Why LLM Inference Deployment is Still a Guessing Game

Training a model feels like progress. Deploying it often feels like panic.

Ask any ML engineer what happens right after they finish training an LLM. The excitement is real — the model works, the metrics look good, the outputs look promising.

And then the questions begin.

“What GPU do we need?”

“What batch size should we use?”

“Will this fit in memory?”

“Should we use vLLM, TensorRT-LLM, or TGI?”

“What will latency look like at 20 RPS?”

“How much will this cost?”

That’s when reality hits: training is the easy part — inference deployment is the real challenge. And today, it’s still a guessing game.

The Real Workflow: Trial-and-Error Engineering

If you’ve deployed real ML models, this sequence will feel painfully familiar:

Pick a GPU based on intuition

Set batch size = 1

Raise batch size = 2 → OOM

Cut sequence length

Switch to a larger GPU

Try vLLM

Try TensorRT-LLM

Benchmark latency

Miss the SLO anyway

Increase replicas

Rerun load tests

Watch costs spike

And then…

Repeat. And repeat again.

This isn’t engineering. This is survival: an Iteration Hell Loop that almost every ML engineer is trapped inside.

Some teams use scripts or automation to speed up parts of this loop, but those tools only execute steps. They don’t help with the decision-making that causes the loop in the first place. Every new fine-tuned checkpoint is treated as a new model version, so the model developer must re-evaluate GPU, batch size, and runtime instead of assuming the previous configuration still holds.

Why This Pain Exists: We Expect Engineers To Do the Impossible

Most of the pain in inference deployment comes from a single, uncomfortable truth:

We expect ML engineers to pick optimal deployment parameters without the information required to make those decisions.

Let’s break down the root causes.

1. Parameter Count ≠ Inference Behavior

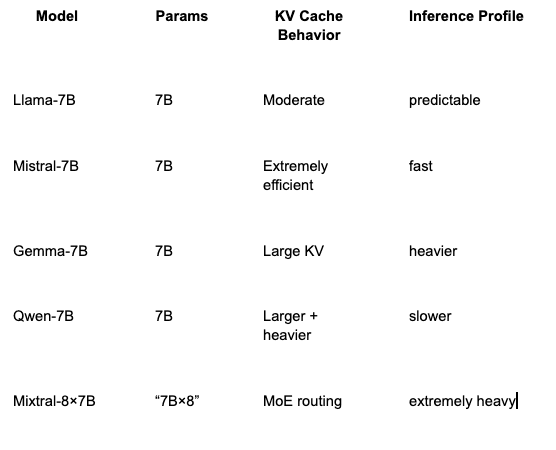

Everyone talks about 7B, 13B, and 70B models. But parameter count is a terrible proxy for inference cost. Two “7B” models can behave completely differently.

Parameter count doesn’t tell you:

KV cache size

attention head layout

hidden dimension

MoE behavior

FlashAttention compatibility

per-token compute

memory fragmentation

prefill vs decode cost differences

Yet these factors completely determine:

GPU choice

feasible batch size

achievable latency

risk of OOM

per-request cost

And engineers are left to figure this out manually.
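To make the KV cache point concrete, here is a minimal Python sketch of the standard per-token KV cache estimate: one K and one V vector per layer per KV head. The config values are taken from the published Hugging Face configs for Llama-2-7B (multi-head attention, 32 KV heads) and Mistral-7B-v0.1 (grouped-query attention, 8 KV heads). Treat them as illustrative inputs. The estimate ignores paging and allocator overhead, and `ModelConfig` and `kv_bytes_per_token` are hypothetical names used only for this illustration.

```python
from dataclasses import dataclass

@dataclass
class ModelConfig:
    # Hypothetical container for the config fields that drive KV cache size.
    name: str
    num_layers: int
    num_kv_heads: int
    head_dim: int
    bytes_per_elem: int = 2  # assumes an fp16/bf16 cache

def kv_bytes_per_token(cfg: ModelConfig) -> int:
    # One K and one V vector per layer per KV head.
    return 2 * cfg.num_layers * cfg.num_kv_heads * cfg.head_dim * cfg.bytes_per_elem

# Both are "7B" models with 32 layers and head_dim 128; only the KV head count differs.
llama2_7b = ModelConfig("Llama-2-7B", num_layers=32, num_kv_heads=32, head_dim=128)
mistral_7b = ModelConfig("Mistral-7B-v0.1", num_layers=32, num_kv_heads=8, head_dim=128)

for cfg in (llama2_7b, mistral_7b):
    print(f"{cfg.name}: {kv_bytes_per_token(cfg) // 1024} KiB of KV cache per token")
```

Same parameter class, but a 4x difference in KV memory per token. That difference translates directly into how many concurrent sequences fit on a GPU.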

2. Pre-processing Impacts Actual Sequence Length

Pre-processing matters for inference whenever it changes the token count.

Depending on the pipeline, you might see:

512-token inputs → trivial

2048-token inputs → substantial

8192-token inputs → massive

Each step up in length multiplies:

KV memory

prefill latency

batch feasibility

GPU requirements

Even the tokenizer matters. Different tokenizers can produce noticeably different token counts for the same text.

This alone can invalidate entire deployment configurations.
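A short sketch shows why sequence length dominates: KV cache memory grows linearly with both batch size and tokens per sequence. The code assumes 131,072 bytes of KV cache per token. This is what the per-layer K/V formula gives for a 32-layer model with 8 KV heads of dimension 128 in fp16, the published shape of Mistral-7B. `kv_cache_gib` is a hypothetical helper.

```python
# Assumed per-token KV footprint: 2 (K and V) * 32 layers * 8 KV heads * 128 head_dim * 2 bytes (fp16).
KV_BYTES_PER_TOKEN = 2 * 32 * 8 * 128 * 2  # 131,072 bytes

def kv_cache_gib(batch_size: int, seq_len: int, bytes_per_token: int = KV_BYTES_PER_TOKEN) -> float:
    # Total KV cache if every sequence in the batch reaches seq_len tokens.
    return batch_size * seq_len * bytes_per_token / 2**30

for seq_len in (512, 2048, 8192):
    print(f"batch=8, seq_len={seq_len:>5}: {kv_cache_gib(8, seq_len):.1f} GiB of KV cache")
```

Going from 512 to 8192 tokens turns half a GiB into 8 GiB for the same batch, before counting weights or activations. This is why a tokenizer or prompt-template change can invalidate a configuration that fit yesterday.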

3. GPUs Are Not Interchangeable

Choosing a GPU sounds simple. But it isn’t.

A10 → cheap but VRAM-limited

L40 → fast but struggles with long contexts

A100 → balanced and reliable

H100 → compute monster but expensive

T4 → struggles with modern models

And each model architecture stresses GPUs differently.

Some are memory-bound.

Some are compute-bound.

Some are KV-cache bound.

Some are sensitive to batch size.

Some scale well.

Some don’t.

There is no one-size-fits-all GPU.
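A rough feasibility check shows how the same model lands very differently across these GPUs. This sketch is built on several assumptions:

- fp16 weights for a nominal 7B model (7e9 parameters × 2 bytes)
- a 131,072-byte-per-token KV footprint (32 layers, 8 KV heads, head_dim 128)
- 4096-token sequences
- 90% of nominal VRAM usable

It ignores activations, CUDA context, and fragmentation beyond that 10% margin. VRAM values are nominal capacities for common SKUs, with A100 and H100 taken as the 80 GB variants and treated as decimal GB for simplicity. `max_batch` is a hypothetical helper.

```python
# Nominal VRAM per GPU in bytes (decimal GB); A100/H100 assumed to be 80 GB SKUs.
GPU_VRAM = {
    "T4": 16 * 10**9,
    "A10": 24 * 10**9,
    "L40": 48 * 10**9,
    "A100-80GB": 80 * 10**9,
    "H100-80GB": 80 * 10**9,
}

WEIGHT_BYTES = 7 * 10**9 * 2               # assumed: nominal 7B parameters in fp16
KV_BYTES_PER_TOKEN = 2 * 32 * 8 * 128 * 2  # assumed: K and V, 32 layers, 8 KV heads, head_dim 128, fp16

def max_batch(vram_bytes: int, seq_len: int, usable_pct: int = 90) -> int:
    # Integer arithmetic avoids float rounding at the boundary.
    usable = vram_bytes * usable_pct // 100
    free_for_kv = usable - WEIGHT_BYTES
    if free_for_kv <= 0:
        return 0  # weights alone do not fit
    return free_for_kv // (seq_len * KV_BYTES_PER_TOKEN)

for gpu, vram in GPU_VRAM.items():
    print(f"{gpu:>10}: max batch at 4096 tokens = {max_batch(vram, 4096)}")
```

Under these assumptions, the T4 cannot hold a single 4096-token sequence, the A10 tops out at 14, the L40 at 54, and the 80 GB cards at 108. A100 and H100 tie here only because this check looks at capacity. It says nothing about whether the GPU is memory- or compute-bound at that batch size.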

4. Runtimes Behave Differently

Serving frameworks can change everything:

vLLM excels with batching

TensorRT-LLM dominates on certain GPUs

TGI offers balance but not peak performance

DeepSpeed is primarily training-optimized

ONNX Runtime is good for small or quantized models

Choosing the wrong runtime can increase latency, reduce throughput, or break entirely due to kernel limitations.

This is too much complexity to expect engineers to reason about manually.

5. Traffic Patterns and SLOs Make Everything Harder

Even with the right hardware and runtime, traffic changes everything.

If you need:

20 RPS

p95 < 80 ms

context lengths ranging from 512–4096 tokens

Your deployment plan is completely different from:

1 RPS

no strict latency

uniform prompt sizes

Traffic determines:

batching behavior

required replicas

queueing delay

GPU saturation level

cost per request

But engineers often have no reliable traffic forecasts before launch.
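Once you have a per-replica throughput number, traffic turns into hardware through simple arithmetic. The sketch below assumes you have measured how many requests per second one replica sustains while still meeting the latency SLO. The 6 RPS used here is a made-up figure. The sizing keeps each replica at or below a target utilization.

The second function uses the textbook M/M/1 mean time in system, 1 / (mu - lambda), as a crude stand-in for queueing delay. Real LLM servers with continuous batching do not behave like M/M/1, so treat it only as an illustration of how delay grows near saturation. Both function names are hypothetical.

```python
import math

def replicas_needed(target_rps: float, per_replica_rps: float, max_utilization: float = 0.7) -> int:
    # Size the fleet so each replica runs at or below max_utilization of its sustainable throughput.
    return math.ceil(target_rps / (per_replica_rps * max_utilization))

def mm1_mean_latency_s(arrival_rps: float, service_rps: float) -> float:
    # M/M/1 mean time in system: 1 / (mu - lambda); unbounded once arrivals reach capacity.
    if arrival_rps >= service_rps:
        return math.inf
    return 1.0 / (service_rps - arrival_rps)

# Hypothetical replica that sustains 6 RPS within the SLO.
print(replicas_needed(20, 6))  # the 20 RPS scenario
print(replicas_needed(1, 6))   # the 1 RPS scenario
for load in (3.0, 4.0, 5.5):
    print(f"{load} RPS on one replica -> mean time in system {mm1_mean_latency_s(load, 6.0):.2f} s")
```

In this model, going from 4 to 5.5 RPS on a single replica quadruples mean time in system. This is why headroom below saturation matters more than average throughput.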

The Result: Engineers Are Flying Blind

Because of all these variables, engineers end up:

reading random GitHub issues

trying settings they found on Reddit

guessing batch sizes

switching GPUs

switching runtimes

burning expensive GPU hours

missing SLOs

delaying launches

This is not due to lack of skill. It’s a lack of visibility. The ecosystem simply hasn’t given ML developers the tools they need.

Why Hasn’t the Industry Solved This?

Because most tooling focuses on execution, not decision-making.

MLflow packages models but doesn’t tell you how to configure inference.

KServe, Seldon, BentoML, and Ray Serve deploy models, but only after you guess the right parameters.

Triton Inference Server is a powerhouse, but it assumes you already know your configuration.

Cloud providers recommend VM sizes, not model-specific GPU/batch/runtime choices.

GPU calculators estimate VRAM, not latency or cost.

No system understands:

model architecture

KV cache dynamics

tokenizer behavior

traffic patterns

latency SLOs

runtime compatibility

GPU cost/perf profiles

And so engineers are left to navigate deployment through trial-and-error.
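To illustrate what a VRAM calculator leaves out, here is a bandwidth-based lower bound on decode latency. During autoregressive decoding, each step reads the model weights and the batch's KV cache from GPU memory, so step time is at least bytes read divided by memory bandwidth. The sketch relies on these assumptions:

- fp16 weights for a nominal 7B model
- a 131,072-byte-per-token KV footprint (32 layers, 8 KV heads, head_dim 128)
- roughly 2 TB/s of memory bandwidth, in the range NVIDIA lists for the A100 80 GB

It ignores compute, kernel launch overhead, and runtime overheads, so real latency will be higher. The helper name is hypothetical.

```python
WEIGHT_BYTES = 7 * 10**9 * 2               # assumed: nominal 7B parameters in fp16
KV_BYTES_PER_TOKEN = 2 * 32 * 8 * 128 * 2  # assumed: K and V, 32 layers, 8 KV heads, head_dim 128, fp16

def decode_step_lower_bound_s(batch_size: int, context_len: int, bandwidth_bytes_per_s: float) -> float:
    # Each decode step streams all weights once, plus every sequence's KV cache.
    bytes_read = WEIGHT_BYTES + batch_size * context_len * KV_BYTES_PER_TOKEN
    return bytes_read / bandwidth_bytes_per_s

BANDWIDTH = 2.0e12  # assumed ~2 TB/s, A100-80GB class

for batch, ctx in ((1, 512), (8, 2048), (32, 4096)):
    step = decode_step_lower_bound_s(batch, ctx, BANDWIDTH)
    print(f"batch={batch:>2}, ctx={ctx:>4}: >= {step * 1e3:.1f} ms/step, "
          f"<= {batch / step:.0f} tokens/s aggregate")
```

Larger batches raise aggregate throughput because the weight read is shared across sequences. Meanwhile, each step gets slower as KV reads grow. Latency depends on that trade-off, not on VRAM alone.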

There Has to Be a Better Way

Given how much information is available today — from model configs to GPU specs to runtime capabilities — it should be possible to compute:

the right GPU type

the optimal batch size

feasible sequence lengths

expected latency and throughput

how many replicas are required for a given SLO

cost per request or per million tokens

which runtime is best for a given model

whether a configuration will OOM

whether a GPU will be underutilized
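Cost per million tokens and cost per request follow from two numbers once throughput is known. This sketch assumes a hypothetical hourly GPU price and a hypothetical sustained throughput. Neither figure comes from any provider or benchmark, and the function names are illustrative.

```python
SECONDS_PER_HOUR = 3600

def cost_per_million_tokens(gpu_usd_per_hour: float, tokens_per_second: float) -> float:
    # Dollars per hour divided by tokens produced per hour, scaled to one million tokens.
    tokens_per_hour = tokens_per_second * SECONDS_PER_HOUR
    return gpu_usd_per_hour / tokens_per_hour * 1_000_000

def cost_per_request(gpu_usd_per_hour: float, requests_per_second: float) -> float:
    # Assumes the replica stays busy at this request rate for the whole hour.
    return gpu_usd_per_hour / (requests_per_second * SECONDS_PER_HOUR)

# Hypothetical inputs: a $3.60/hour GPU sustaining 1,000 output tokens/s or 5 requests/s.
print(f"${cost_per_million_tokens(3.60, 1000):.2f} per million tokens")
print(f"${cost_per_request(3.60, 5):.5f} per request")
```

Doubling throughput on the same GPU halves the cost per token, and any idle time raises both figures. This is how batch size, runtime choice, and underutilization show up directly on the bill.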

This shouldn’t require days of experimentation.

It shouldn’t require reading dozens of forum threads.

It shouldn’t require tribal knowledge.

Inference deployment should not be guesswork.

Engineers deserve better tools — ones that understand their model, their workload, and their constraints, and help them make informed decisions before they deploy.

Conclusion: Let’s Make Inference Simple Again

Training an LLM is complex. Inference deployment shouldn’t be.

The industry needs tools that are:

model-aware

architecture-aware

SLO-aware

cost-aware

runtime-aware

hardware-aware

Tools that guide configurations intelligently — not through trial-and-error.

Because once engineers can deploy models confidently and efficiently, we can finally shift our energy back to where it matters: building great systems, great products, and great experiences powered by AI.

At ParallelIQ, we’re building the intelligence layer that turns model deployment from guesswork into engineering.