
Variability Is the Real Bottleneck in AI Infrastructure

AI infrastructure conversations usually start with scarcity: GPU shortages, long lead times, and rising costs. But once systems are deployed, a more subtle problem dominates day-to-day reality:

Variability, not scarcity, is what breaks AI systems at scale.

Teams routinely observe something puzzling:

The same GPU SKU behaves differently across clusters

Average throughput looks fine, but users complain

Performance degrades “randomly” under load

These are not bugs. They are emergent properties of complex systems.

What do we mean by “variability”?

Variability is the spread between best-case, typical, and worst-case behavior of a system. In AI infrastructure, it shows up as:

Wide latency distributions

High tail latency (p95, p99)

Inconsistent throughput across environments

“Noisy” performance that defies simple explanation

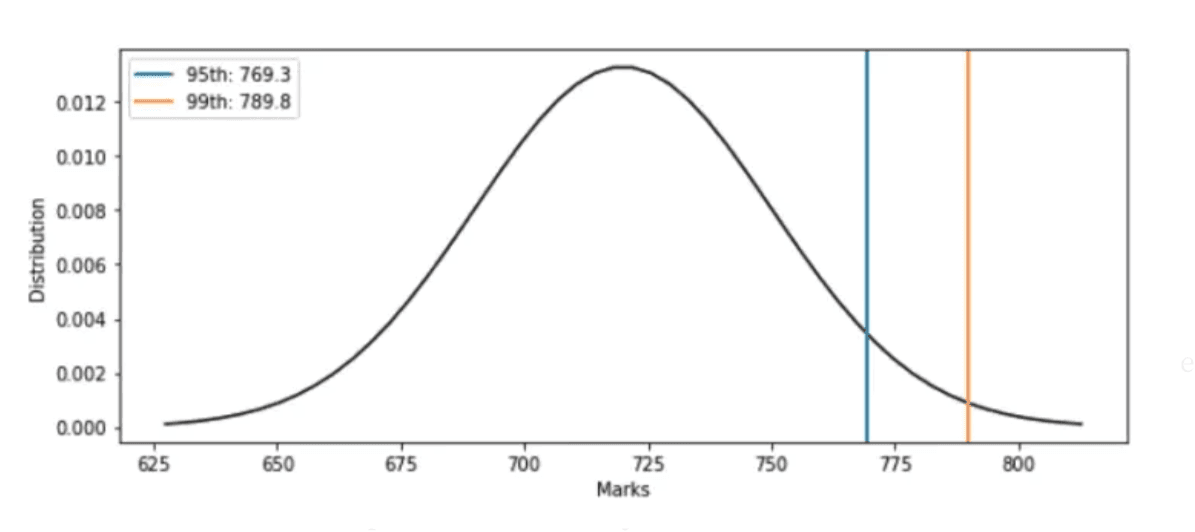

Latency distribution and p95/p99 latency

Two environments can look identical on paper and still feel radically different in production.
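To make the gap between averages and tails concrete, here is a minimal Python sketch. Both sample sets are invented for illustration (they are not measurements from any real cluster), and `percentile` is a simple nearest-rank helper, not the definition used by any particular monitoring tool.

```python
import math
import statistics


def percentile(samples, q):
    """Nearest-rank percentile: the smallest sample such that at least
    q percent of all samples are less than or equal to it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(len(ordered) * q / 100))
    return ordered[rank - 1]


# Hypothetical latencies in milliseconds for two "identical" environments.
steady = [100.0] * 100
spiky = [70.0] * 90 + [275.0] * 8 + [750.0] * 2

for name, samples in (("steady", steady), ("spiky", spiky)):
    print(
        name,
        "mean:", statistics.mean(samples),
        "p50:", percentile(samples, 50),
        "p95:", percentile(samples, 95),
        "p99:", percentile(samples, 99),
    )
```

Both sets have the same mean (100 ms), yet the second one has a p95 of 275 ms and a p99 of 750 ms. On a dashboard that only shows averages, the two would be indistinguishable.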

Why the same GPU SKU behaves differently

A GPU is not a standalone engine. It is one component in a much larger system. Effective throughput depends on:

CPU speed and NUMA layout

PCIe vs NVLink topology

Network fabric (Ethernet, InfiniBand, RoCE)

Storage paths and I/O contention

Software stack (drivers, CUDA, NCCL, runtimes)

Scheduling, batching, and isolation policies

The GPU defines a ceiling. The cluster determines how close you get to it. This is why “equivalent capacity” is rarely equivalent in practice.

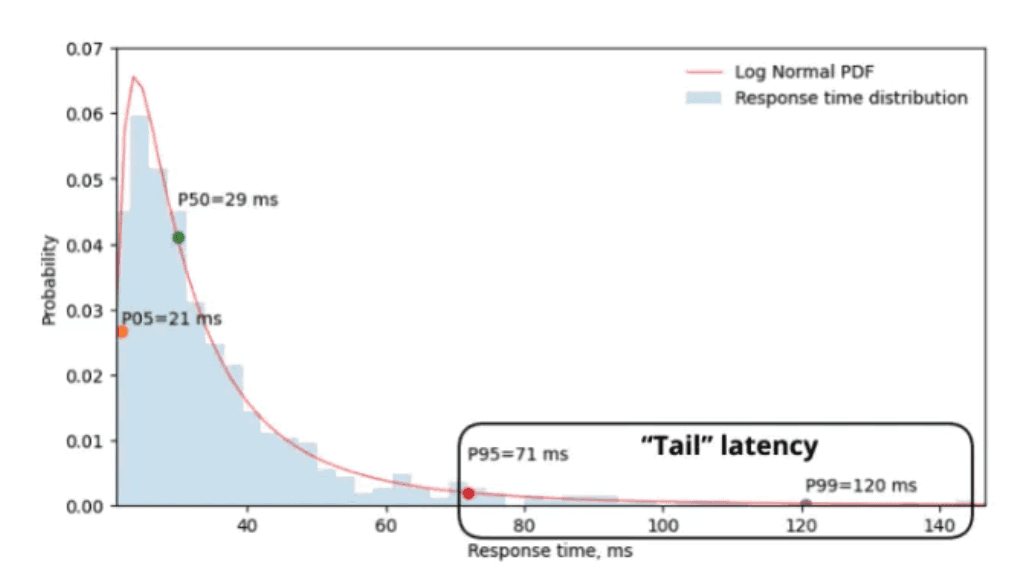

Variability doesn’t appear as failure — it appears as tails

Most systems don’t fail catastrophically. They fail statistically. Average latency or throughput can look healthy while:

1 in 20 requests is slow (p95)

1 in 100 requests is painfully slow (p99)

The latency of these slowest requests is called tail latency.

Best-case behavior, typical case, slow-but-common case and worst-case user experience

Why tails matter more than averages

Users don’t experience averages. They experience the slow request. This is why performance engineers focus on:

p95, p99 latency

Worst-case behavior

Consistency, not just speed

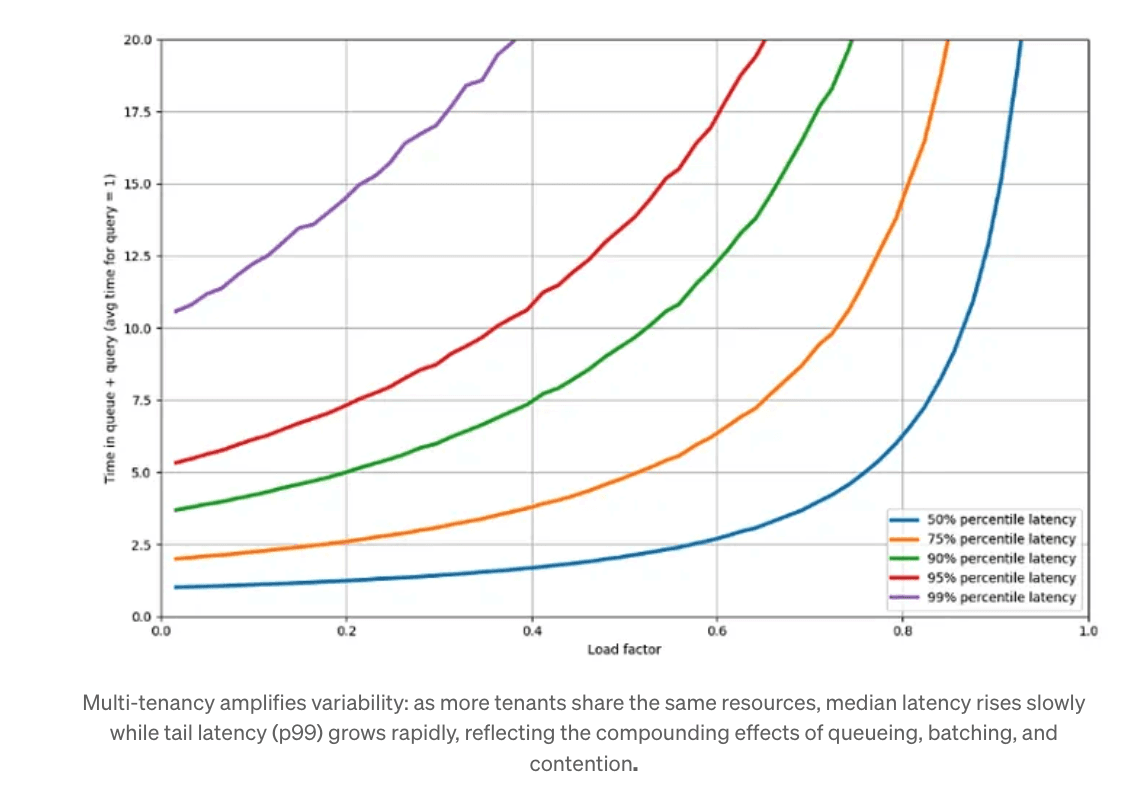

Concurrency makes variability explode


Multi-tenancy amplifies variability: as more tenants share the same resources, median latency rises slowly while tail latency (p99) grows rapidly, reflecting the compounding effects of queueing, batching, and contention.

End-to-end latency is not just execution time:

End-to-end latency = waiting time + execution time

As concurrency increases:

Requests queue behind one another

GPUs batch work to maximize throughput

Waiting time dominates execution time

The result is a system where throughput remains high and median latency rises only slowly, even as p99 latency climbs steeply and nonlinearly as the system approaches saturation. This explains why two systems with identical GPUs and similar utilization can feel completely different under load.
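The effect of queueing on the tail can be explored with a toy simulation. The sketch below is a deliberately simplified model, not a description of any real serving stack: it assumes a single FIFO server, a fixed execution time per request, and randomly spaced arrivals, and it ignores batching entirely. The function name `simulate_latencies` and all parameter values are illustrative assumptions.

```python
import math
import random


def percentile(samples, q):
    """Nearest-rank percentile of a list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(len(ordered) * q / 100))
    return ordered[rank - 1]


def simulate_latencies(load, n_requests=20_000, service_ms=10.0, seed=0):
    """Single FIFO server with a fixed execution time per request.

    `load` is the offered utilization (arrival rate x execution time) and
    must stay below 1.0 for the queue to remain stable. Arrival gaps are
    exponentially distributed, which is an assumption of this sketch.
    """
    rng = random.Random(seed)
    arrival_rate = load / service_ms  # requests per millisecond
    now = 0.0
    server_free_at = 0.0
    latencies = []
    for _ in range(n_requests):
        now += rng.expovariate(arrival_rate)
        start = max(now, server_free_at)  # wait if the server is still busy
        server_free_at = start + service_ms
        latencies.append(server_free_at - now)  # waiting time + execution time
    return latencies


results = {}
for load in (0.3, 0.6, 0.9):
    lat = simulate_latencies(load)
    results[load] = (percentile(lat, 50), percentile(lat, 99))
    print(f"load={load:.1f}  p50={results[load][0]:.1f} ms  p99={results[load][1]:.1f} ms")
```

Execution time is identical for every request in this model, so any spread in the output comes purely from waiting. Running it at increasing load shows the gap between p50 and p99 widening as the server approaches saturation.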

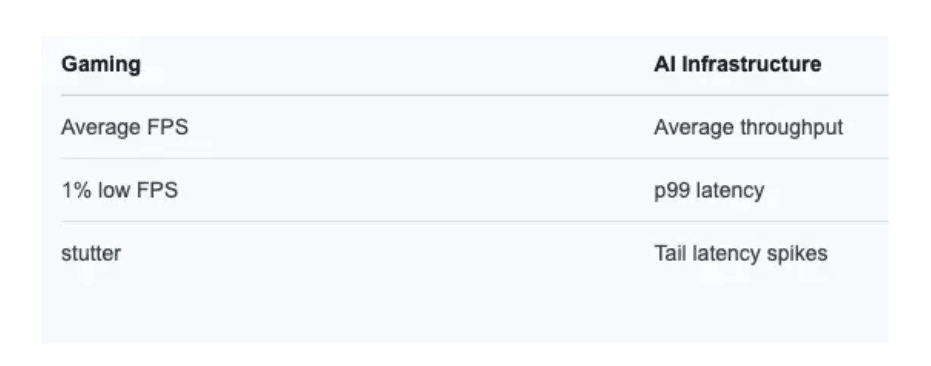

A useful analogy: Tom’s Hardware and “1% lows”

Performance variability isn’t unique to AI infrastructure. It’s well understood in other performance-sensitive domains. Tom’s Hardware has long emphasized two metrics in CPU and GPU benchmarks:

Geometric mean (geomean)

Instead of averaging raw scores, Tom’s Hardware uses geometric means to summarize performance across workloads. Why?

Performance differences are multiplicative (ratios), not additive

A single big win shouldn’t hide many small regressions

Geomeans preserve proportional truth

This mirrors real systems: a workload that runs at 2× speed in one case and 0.5× speed in another has a geometric mean of 1×, which nets out to “no real improvement,” whereas the arithmetic mean (1.25×) would suggest a gain.
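The arithmetic behind that example is easy to check with Python's standard `statistics` module. The two ratios are the illustrative values from the text, not benchmark results.

```python
import statistics

# Speed ratios relative to a baseline (1.0 means unchanged).
ratios = [2.0, 0.5]

arithmetic = statistics.mean(ratios)            # 1.25, suggests a 25% gain
geometric = statistics.geometric_mean(ratios)   # approximately 1.0, no net gain

print(f"arithmetic mean: {arithmetic:.3f}")
print(f"geometric mean:  {geometric:.3f}")
```

The geometric mean is never larger than the arithmetic mean for positive values, which is why it is the more conservative summary when results are ratios.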

1% low performance

Tom’s Hardware also reports 1% low FPS, not just average FPS. 1% low means:

The average performance of the slowest 1% of samples.

Two systems can have identical average FPS but wildly different 1% lows — and feel completely different to users. This maps directly to infrastructure:

Users feel the tail, not the mean.
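As a rough illustration of the definition above, the following sketch computes a 1% low as the average of the slowest 1% of samples. The helper name `one_percent_low` and the FPS values are made up for this example. Benchmarking tools may compute the metric differently (for instance from frame times), so treat this as one plausible reading and not the exact method any publication uses.

```python
def one_percent_low(fps_samples):
    """Average of the slowest 1% of samples (always at least one sample)."""
    ordered = sorted(fps_samples)          # lowest FPS first
    count = max(1, len(ordered) // 100)    # size of the slowest 1%
    return sum(ordered[:count]) / count


# Two hypothetical runs with the same average FPS.
smooth = [60.0] * 100
stutter = [60.5] * 98 + [35.5] * 2

for name, run in (("smooth", smooth), ("stutter", stutter)):
    average = sum(run) / len(run)
    print(f"{name}: average={average:.1f} FPS, 1% low={one_percent_low(run):.1f} FPS")
```

Both runs average 60 FPS, but the 1% low drops from 60 to 35.5 in the second run. For request latency the analogous view is the slowest 1% of requests, which is what p99 summarizes.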

Why abstraction collapses at scale

Platforms attempt to hide variability by abstraction:

“All GPUs of this class are interchangeable”

“This capacity behaves the same everywhere”

This works at a small scale mainly because humans absorb the variance. As workloads grow more complex and concurrency increases, hidden differences begin to leak through, performance becomes unpredictable, and exceptions start to pile up. This is abstraction collapse:

when variance exceeds tolerance and reality diverges too far from the simplified model a platform presents.

Variability is not the enemy — it’s information

Here’s the critical reframing:

Variability is signal, not noise.

It tells you:

Which configurations fit which workloads

Where bottlenecks actually are

Which tradeoffs are being made implicitly

The problem isn’t variability. The problem is that most systems don’t make intent explicit, so they can’t tell acceptable variability from a genuine mismatch.

The role ParallelIQ plays

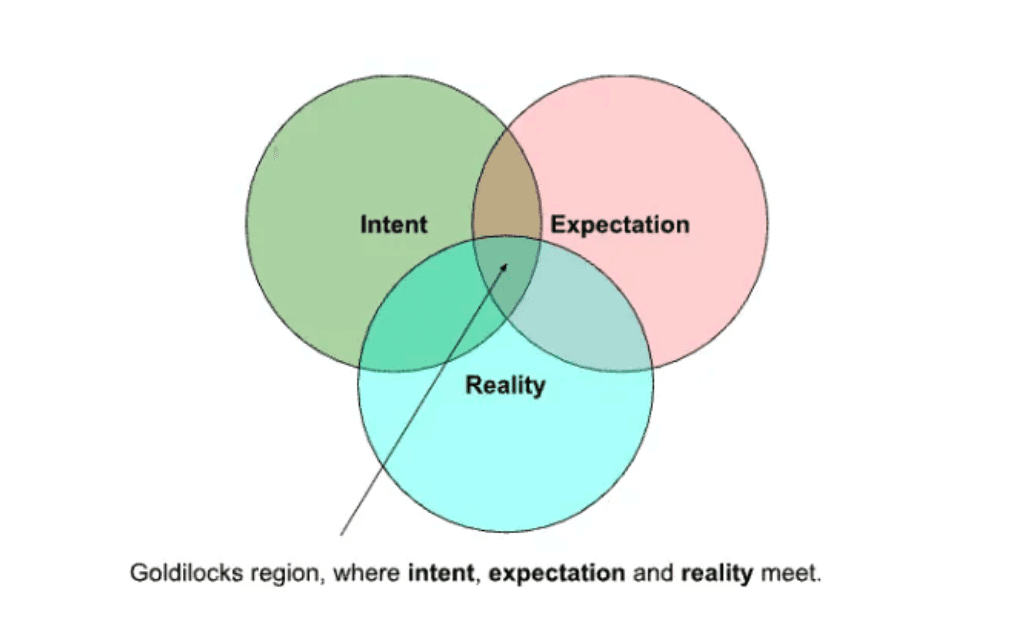

ParallelIQ exists to make variability understandable and actionable. Most systems only track Reality (metrics) and only talk about Expectations. Very few systems encode Intent. Expectation is the mental model that users, operators, or product teams hold. It is the most dangerous circle because it feels obvious. Note that at small scale the Goldilocks region is large, whereas at large scale it shrinks rapidly.

ParallelIQ addresses variability by introducing a missing layer: intent. It also forces expectations to become visible and testable. By explaining system behavior, it helps expectations converge toward reality over time.

1. Make intent explicit

ParallelIQ captures what actually matters:

Latency targets (p95 vs p99)

Throughput expectations

Cost sensitivity

Tolerance for batching and variability

Not as tribal knowledge — as structured input.
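What "structured input" might look like can be sketched with a small dataclass. This is purely hypothetical: the class name `WorkloadIntent`, its fields, and the validation rules are assumptions made for illustration and do not describe ParallelIQ's actual schema or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkloadIntent:
    """Hypothetical record of what a workload needs (not a real schema)."""
    name: str
    latency_percentile: int      # which tail matters: 95 or 99
    latency_target_ms: float     # limit at that percentile
    min_throughput_rps: float    # expected requests per second
    cost_sensitivity: str        # "low", "medium", or "high"
    tolerates_batching: bool     # may requests be delayed to form batches?

    def __post_init__(self):
        if self.latency_percentile not in (95, 99):
            raise ValueError("latency_percentile must be 95 or 99")
        if self.latency_target_ms <= 0 or self.min_throughput_rps <= 0:
            raise ValueError("targets must be positive")
        if self.cost_sensitivity not in ("low", "medium", "high"):
            raise ValueError("cost_sensitivity must be low, medium, or high")


# Example values are invented.
chat_intent = WorkloadIntent(
    name="interactive-chat",
    latency_percentile=99,
    latency_target_ms=500.0,
    min_throughput_rps=50.0,
    cost_sensitivity="medium",
    tolerates_batching=False,
)
print(chat_intent)
```

Once intent is recorded as data, it can be validated, versioned, and compared against measurements, which is not possible while it lives only in people's heads.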

2. Compare intent to reality

By observing live systems, ParallelIQ:

Measures actual distributions, not averages

Detects drift and mismatch

Explains why behavior diverges
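A minimal sketch of the comparison step follows, under the assumption that intent is expressed as latency limits per percentile and reality is a list of observed latencies. The function `find_mismatches` and the sample data are hypothetical and are not taken from ParallelIQ. Explaining why behavior diverges is outside the scope of this sketch.

```python
import math
import statistics


def percentile(samples, q):
    """Nearest-rank percentile of a list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(len(ordered) * q / 100))
    return ordered[rank - 1]


def find_mismatches(targets_ms, latencies_ms):
    """Map each violated percentile to (observed, limit).

    `targets_ms` maps a percentile (for example 99) to the highest
    acceptable latency at that percentile, in milliseconds.
    """
    mismatches = {}
    for q, limit in sorted(targets_ms.items()):
        observed = percentile(latencies_ms, q)
        if observed > limit:
            mismatches[q] = (observed, limit)
    return mismatches


# Invented data: the mean looks healthy, the tail does not.
observed_latencies = [70.0] * 90 + [275.0] * 8 + [750.0] * 2
targets = {95: 300.0, 99: 500.0}

print("mean:", statistics.mean(observed_latencies))
print("mismatches:", find_mismatches(targets, observed_latencies))
```

With these numbers the mean is 100 ms and the p95 target is met, but the p99 target is missed (750 ms observed against a 500 ms limit). A check against the average alone would have reported nothing wrong.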

3. Constrain recommendations

ParallelIQ doesn’t predict performance deterministically. Instead, it asks:

“Given this intent, which realities are compatible — and which are not?”

That distinction makes recommendations credible.
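The question "which realities are compatible?" can be read as a filtering problem instead of a prediction problem. The sketch below illustrates that reading only. The types `Intent` and `ObservedEnvironment`, the `conflicts` function, the cluster names, and every number are assumptions for the example, not ParallelIQ's implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intent:
    p99_target_ms: float
    min_throughput_rps: float
    max_usd_per_hour: float


@dataclass(frozen=True)
class ObservedEnvironment:
    name: str
    p99_ms: float
    throughput_rps: float
    usd_per_hour: float


def conflicts(intent, env):
    """Return the reasons (possibly none) why env cannot satisfy intent."""
    reasons = []
    if env.p99_ms > intent.p99_target_ms:
        reasons.append("p99 latency above target")
    if env.throughput_rps < intent.min_throughput_rps:
        reasons.append("throughput below required minimum")
    if env.usd_per_hour > intent.max_usd_per_hour:
        reasons.append("cost above budget")
    return reasons


intent = Intent(p99_target_ms=500.0, min_throughput_rps=50.0, max_usd_per_hour=40.0)
candidates = [
    ObservedEnvironment("cluster-a", p99_ms=320.0, throughput_rps=80.0, usd_per_hour=35.0),
    ObservedEnvironment("cluster-b", p99_ms=900.0, throughput_rps=120.0, usd_per_hour=30.0),
    ObservedEnvironment("cluster-c", p99_ms=280.0, throughput_rps=60.0, usd_per_hour=55.0),
]

compatible = [env.name for env in candidates if not conflicts(intent, env)]
rejected = {env.name: conflicts(intent, env) for env in candidates if conflicts(intent, env)}

print("compatible:", compatible)
print("rejected:", rejected)
```

The output is a set of options that fit plus a stated reason for each rejection. No single performance number is promised, which is the distinction the section draws.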

From hiding variability → managing it

Once variability is explicit, systems can:

Route workloads based on fit, not labels

Offer tiers instead of pretending everything is equal

Price capacity based on behavior, not specs

Adapt configurations proactively

This is how variability becomes a design input, not a failure mode.

Why this matters now

AI infrastructure is shifting toward:

Multi-provider environments

Marketplaces and flexible contracts

Shared, highly concurrent systems

Trust will depend on how well platforms handle variability. The winners won’t be those with the most GPUs. They’ll be the ones who can say:

“This is what this capacity actually means — and why it’s right for your workload.”

That requires intent, visibility, and reconciliation. That’s the layer ParallelIQ is building.

If you take just one thing away from this piece:

Variability is inevitable in AI infrastructure — the real opportunity is to make it explicit, reason about it honestly, and design systems that work with it rather than pretending it doesn’t exist.