
The Next Frontier of Trust: Why AI-Native Compliance Starts Where Cloud Compliance Ends

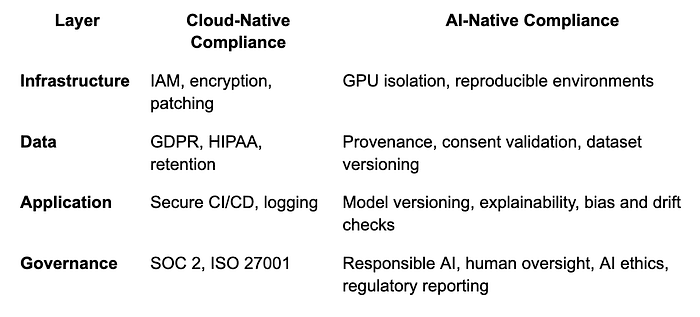

From Cloud-Native to AI-Native: How Compliance Has Been Redefined

For more than a decade, compliance was the quiet backbone of the cloud revolution. Frameworks like SOC 2, ISO 27001, HIPAA, and GDPR defined what it meant to run a secure and trustworthy business. They taught startups how to encrypt data, control access, and prove reliability to enterprise buyers.

In that world, compliance meant infrastructure hygiene: servers patched or not, logs audited or missing. Security and availability were binary states, and annual audits captured your operational maturity. The cloud transformed how software shipped — but software itself remained deterministic. It did what you programmed it to do.

Then AI arrived.

Startups no longer deploy static code; they deploy learning systems that adapt, generate, and evolve. Data changes daily. Models drift. Outcomes shift with every retraining cycle. The old compliance playbook — written for servers and scripts — can’t keep up with systems that think.

The cloud era made trust a certification.

The AI era makes trust a living system — observable, explainable, and provable.

This is the new compliance frontier: where governance moves from paperwork to pipelines, from policy to practice. And it’s where the next generation of AI-native companies will either earn enduring trust — or lose it before they scale.

The Cloud-Native Era: Compliance as Infrastructure Hygiene

Before AI reshaped technology, cloud-native compliance represented operational maturity. It assured customers that your product was secure, your infrastructure reliable, and your data protected — the ticket to enterprise trust. Its goal was simple:

Make your systems secure, auditable, and always available.

Core frameworks like SOC 2, ISO 27001, FedRAMP, and GDPR asked clear, binary questions:

Are your servers patched and monitored?

Is data encrypted at rest and in transit?

Do you log and secure administrative access?

Is your uptime tracked, with recovery plans in place?

Compliance mapped perfectly to deterministic software. You could configure systems, lock down access, and prove compliance with dashboards and audit reports. It became part of DevOps culture — monitoring as code, least privilege, automated checks.

But as machine learning entered the stack, securing the infrastructure alone was no longer enough. Companies now had to answer:

What data trained your model?

Can you reproduce its results?

Does it behave fairly and consistently?

These are behavioral questions, not infrastructure ones — exposing the limits of frameworks built for static systems.

Cloud-native compliance let you trust your environment.

AI-native compliance ensures you can trust your outcomes.

The AI-Native Era: Compliance as Explainability and Accountability

As AI moved to the center of products, the compliance stack had to evolve. The question is no longer “Is your system secure?” but “Can you prove how it learned, what it used, and why it decided?”


Each new layer introduces new primitives:

Data provenance — trace every input to its lawful source.

Model lineage — document how models were trained and validated.

Explainability — clarify why systems make decisions.

Governance — connect observability to executive oversight.
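To make the first two primitives concrete, here is a minimal sketch of a lineage record that ties a model version to the exact data, source, and code that produced it. The field names (`data_source`, `consent_basis`, `code_version`) and the `build_record` helper are illustrative assumptions, not part of any standard or specific product.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class LineageRecord:
    """Hypothetical record linking a trained model to its inputs."""
    dataset_sha256: str    # content hash of the training data
    data_source: str       # where the data came from (assumed free-text label)
    consent_basis: str     # lawful basis recorded for the data (assumed label)
    code_version: str      # e.g. a commit hash of the training code
    hyperparameters: dict  # settings used for this training run

    def fingerprint(self) -> str:
        # Serialize with sorted keys so the same record always hashes the same.
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


def build_record(dataset: bytes, data_source: str, consent_basis: str,
                 code_version: str, hyperparameters: dict) -> LineageRecord:
    # Hash the raw dataset bytes so any change to the data changes the record.
    return LineageRecord(
        dataset_sha256=hashlib.sha256(dataset).hexdigest(),
        data_source=data_source,
        consent_basis=consent_basis,
        code_version=code_version,
        hyperparameters=hyperparameters,
    )
```

Storing the fingerprint next to each model artifact gives an auditor a single value to compare: if the data, code, or settings differ from what was documented, the fingerprint no longer matches.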

Compliance is no longer a checkbox — it’s a feedback loop. It must operate as continuously as your data pipelines and evolve as fast as your models.

Cloud compliance ended at infrastructure.

AI compliance begins at intelligence.

Why Traditional Compliance Fails in AI

Cloud-era frameworks were built for static, human-authored systems. They measured encryption, uptime, and access — but never why decisions changed or how outputs evolved.

They fail in AI for four reasons:

Static Controls, Dynamic Systems — Annual audits can’t capture models that retrain weekly.

No Behavioral Visibility — Logs show what happened, not why.

Opaque Supply Chains — Open models and data vendors introduce unseen risks.

Missing Ethical Oversight — Bias, fairness, and explainability weren’t part of legacy standards.

You can’t audit yesterday’s systems to understand tomorrow’s models.

Compliance is now continuous governance — merging engineering, data, and ethics into a live feedback loop.

The Pillars of AI-Native Compliance

AI-native compliance rests on three pillars:

Traceability: Know where everything comes from.

Every dataset and model version must be traceable back to its origin, code, and environment. This turns audits into replayable records.

Explainability: Know why decisions are made.

Understanding which factors influence a decision, and whether performance stays consistent, is now a regulatory and ethical necessity.

Governance Automation: Make compliance continuous.

Policies like “no model trains on unconsented data” or “bias metrics must stay below X” should be enforced in code, not paperwork.
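The two example policies can be sketched as a gate that runs before a model is promoted. This is a minimal illustration under stated assumptions: the `TrainingRun` shape is hypothetical, the bias metric is a simple demographic parity gap (one of several possible fairness metrics, chosen here only for brevity), and `max_gap` stands in for the unspecified threshold "X".

```python
from dataclasses import dataclass


@dataclass
class TrainingRun:
    """Hypothetical summary of one training run."""
    dataset_consent: dict[str, bool]          # dataset name -> consent recorded?
    positive_rate_by_group: dict[str, float]  # group -> share of positive predictions


def demographic_parity_gap(rates: dict[str, float]) -> float:
    # Largest difference in positive-prediction rate between any two groups.
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


def policy_violations(run: TrainingRun, max_gap: float) -> list[str]:
    """Return human-readable violations; an empty list means the run passes."""
    violations = []
    # Policy 1: no model trains on unconsented data.
    for name, consented in sorted(run.dataset_consent.items()):
        if not consented:
            violations.append(f"dataset '{name}' has no recorded consent")
    # Policy 2: the bias metric must stay at or below the threshold (the "X").
    gap = demographic_parity_gap(run.positive_rate_by_group)
    if gap > max_gap:
        violations.append(f"demographic parity gap {gap:.2f} exceeds {max_gap:.2f}")
    return violations
```

Wired into a CI or training pipeline, a non-empty result would block the release, so the policy is enforced on every retraining cycle rather than once a year.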

Traceability tracks origins.

Explainability clarifies behavior.

Governance automation enforces integrity.

That’s Compliance-as-Infrastructure — where trust is engineered, not asserted.

The Emerging Standards

Regulators and standards bodies are now encoding these expectations into law and formal frameworks:

EU AI Act: Defines AI risk tiers and mandates documentation, oversight, and audit trails.

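NIST AI RMF: A voluntary U.S. framework organized around four functions (govern, map, measure, and manage) for handling AI risk, with transparency and fairness among its trustworthiness goals.

ISO/IEC 42001: The first AI-specific management system standard, bringing the management-system approach familiar from ISO 27001 to Responsible AI.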

Despite regional differences, all share the same principles: traceability, transparency, accountability. The question has shifted from “Are we secure?” to “Are we responsible?”

Compliance Isn’t One-Size-Fits-All

While the foundations of AI compliance are universal — governance, data integrity, transparency, fairness, and security — every industry adds its own layer of regulation.

Healthcare must meet FDA expectations for Software as a Medical Device (SaMD) and Good Machine Learning Practice (GMLP). Finance operates under fair-lending laws, model governance standards, and audit trails demanded by OCC and Basel III. Real estate, retail, and government each carry their own ethical, consumer, and privacy expectations.

At ParallelIQ, we approach this through a modular compliance framework: a core checklist of 30 universal controls, augmented by vertical-specific extensions.

Compliance isn’t static — it adapts with your domain.

This approach keeps AI systems consistently trustworthy across contexts — whether they’re diagnosing disease, underwriting loans, or pricing properties.

AI-native compliance starts with universal principles, but true readiness means knowing your industry’s language of trust.

ParallelIQ: Making Compliance Operational

At ParallelIQ, we make compliance part of the runtime. Our 30-point AI Compliance Readiness Inspection aligns data handling, observability, and governance with emerging global standards.

We turn policies into pipelines and audits into automation:

Automatic evidence — lineage, access logs, fairness metrics.

Runtime enforcement — compliance rules embedded in your AI stack.

Continuous readiness — always audit-ready, not audit-anxious.
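One common way to make automatically collected evidence trustworthy is a hash-chained, append-only log, where each entry commits to the one before it. The sketch below is a generic illustration of that technique, not a description of ParallelIQ's implementation; the `EvidenceLog` class and the event fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, event: dict) -> str:
    # Hash the previous entry's hash together with this event's content.
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class EvidenceLog:
    """Append-only log; editing any past entry breaks the chain."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, event: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        digest = _entry_hash(prev, event)
        self.entries.append({"prev": prev, "event": event, "hash": digest})
        return digest

    def verify(self) -> bool:
        # Recompute every hash from the start and compare with what was stored.
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev:
                return False
            if entry["hash"] != _entry_hash(prev, entry["event"]):
                return False
            prev = entry["hash"]
        return True
```

A pipeline could append one event per training run, access grant, or fairness measurement, and an auditor could call `verify()` to confirm that nothing was altered after the fact.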

In the AI-native world, trust is infrastructure — and compliance is how you prove it.

Trust Is the New Infrastructure

The cloud era taught us to scale; the AI era will teach us to be accountable. In a world run by learning systems, trust has become the new measure of performance.

AI must not only perform — it must prove. Those who can demonstrate responsible, transparent, and traceable systems will own the next decade.

At ParallelIQ, we help startups build that foundation: from GPU observability and data lineage tracking to automated compliance evidence and Responsible AI governance.

Cloud-native made systems fast.

AI-native makes them wise.

→ Learn how ParallelIQ’s 30-point AI Compliance Readiness Inspection helps startups operationalize trust and scale responsibly.