AI/ML Model Operations

The Hidden Costs of Manual Inference Services: Why Model Deployment Still Feels Like a Ticket Queue

Introduction — The State of Model Deployment Today

Training a model has never been easier. Serving it? That’s still an adventure.

Across startups and enterprises alike, most AI teams still rely on manual inference services — a patchwork of tickets, Slack threads, and YAML files. The process works just well enough to deploy one or two models, but the moment the organization scales, it collapses under its own weight.

In theory, inference should be a push-button operation. In practice, it’s a human workflow that depends on tribal knowledge, individual heroics, and endless coordination between model owners and infrastructure teams.

Manual inference services are the hidden tax of modern AI operations.

The Manual Workflow — How It Actually Works

Let’s walk through what happens when a data scientist finishes a new model and wants it served in production.

Request intake — The model owner opens a ticket or emails the infra team: “Please deploy sentiment-v3 on 3 GPUs in the EU region.”

Validation — The infra engineer checks the model size, dependencies, and runtime framework. Often, this involves reading through notebooks or guessing the required Python packages.

Packaging — A Dockerfile is written by hand (or copied from a previous project), dependencies installed, and an image is pushed to the registry.

Resource allocation — GPUs are reserved manually — through a Slack request, a spreadsheet, or sometimes just an ad-hoc shell command.

Deployment — YAML manifests are applied manually to Kubernetes or a managed service, usually adapted from a previous deployment.

Smoke testing — Someone runs curl or ab (ApacheBench) commands to check if the endpoint responds.

Monitoring — Metrics dashboards are created manually in Grafana or Prometheus, if at all.

Scaling or rollback — When latency spikes or errors appear, someone SSHs into a node or scales pods by hand.

This entire process can take anywhere from a few hours to several days, depending on who’s available, which scripts still work, and how many approvals are needed.

“Every model deployment feels like a one-off project — not a repeatable process.”

The Artifacts and Hand-Offs

Each deployment involves a growing collection of artifacts:

Model weights (.pt, .onnx, .pkl, etc.)

Dockerfiles and container images

Kubernetes YAMLs and environment variables

Configuration spreadsheets

Ad-hoc scripts for health checks

Slack threads and emails for approval

Monitoring dashboards created manually

These artifacts live in different silos — Git, Slack, Google Drive, and people’s laptops. As a result:

Dependencies get out of sync.

Old manifests get reused accidentally.

Credentials are copied between projects.

No one knows which version is actually running in production.

When the next model comes along, the cycle repeats.

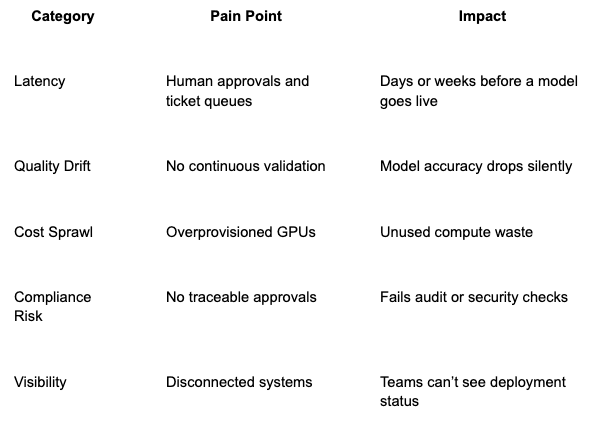

The Operational Pain Points

Every team knows this pain: infra is firefighting, data scientists are waiting, and leadership wonders why “serving models” is slower than training them.

Why It Persists

So why do so many teams still operate like this?

Training matured faster than serving. Frameworks like PyTorch and TensorFlow made model building easy — but serving infrastructure lagged behind.

Every model is different. NLP, vision, tabular — each has its own dependencies, data types, and scaling patterns.

Infra teams prioritize uptime over abstraction. When your primary goal is “keep it running,” there’s little time to build automation.

No standard lifecycle for inference exists. CI/CD pipelines evolved for code, not for models that depend on data, hardware, and validation metrics.

Manual processes persist because they “work” — until the number of models, teams, or customers grows. Then, they become the bottleneck.

The Hidden Costs

The visible cost is time, but the hidden costs run deeper.

🧠 Engineering Overhead

Highly skilled engineers end up doing repetitive, mechanical work — copying YAMLs, adjusting manifests, rerunning the same smoke tests. It’s toil, not leverage.

💸 Resource Waste

Without automated scaling and telemetry, GPU clusters are often overprovisioned. Idle resources burn thousands of dollars per month because no one’s watching utilization in real time.

🔒 Audit and Compliance Friction

When auditors ask, “Who approved this deployment?” or “Which model version was live on June 10th?” — the answers live in Slack or memory, not logs.

⚙️ Model Underperformance

Without automated evaluation or drift detection, models degrade quietly. By the time issues are noticed, weeks of bad predictions may have already impacted users or business metrics.

🧯 Team Burnout

Manual inference ops turn talented engineers into process managers — fighting fires, not improving the system. It’s hard to innovate when you’re always catching up.

What “Good” Would Look Like

Imagine a world where:

Model owners submit a deployment request through a portal — no tickets, no Slack threads.

Policies, quotas, and approvals are enforced automatically.

Packaging, validation, and deployment happen through defined workflows.

Monitoring and retraining are built in, not afterthoughts.

The entire lifecycle is visible, versioned, and auditable.

That’s what modern inference infrastructure looks like — one where human workflows are replaced with orchestrated, policy-aware automation.

“If CI/CD standardized code deployment, inference orchestration will standardize model deployment.”

Conclusion — Manual Doesn’t Scale

Manual inference operations were fine when teams had one or two models. But as companies deploy dozens — across geographies, frameworks, and hardware — the manual approach becomes a liability.

The cost isn’t just time. It’s opportunity, predictability, and trust.

As AI infrastructure matures, inference will follow the same arc that DevOps did: from tickets → scripts → pipelines → orchestration.

In our future articles, we’ll explore how BPMN-based orchestration turns today’s manual, ticket-driven workflows into automated, auditable lifecycles — enabling true self-service inference at scale.

Call to Action

ParallelIQ helps AI teams turn manual inference operations into automated, auditable workflows — cutting deployment time by 70% and GPU costs by up to 40%. For more information reach out to us here.