AI/ML Model Operations

The Hidden Backbone of AI: Building an Inference Service That Scales

The Hidden Backbone of AI

Every AI model eventually meets its moment of truth — when it leaves the lab and starts serving real users. That moment is powered by one thing: inference.

While training gets the attention (GPUs, data, and model breakthroughs), inference is the invisible backbone that turns intelligence into business value. It’s the layer that makes a model useful — the runtime that receives a request, executes it in milliseconds, and sends a result that might drive a diagnosis, a recommendation, or a fraud decision.

Yet, most AI organizations still treat inference as an afterthought. Models are trained with precision but deployed with friction — via tickets, manual scripts, and one-off containers. The result? Slow launches, unpredictable costs, compliance risks, and missed opportunities for optimization.

The Manual Reality

As we discussed in a previous blog, many AI companies today have Inference Service teams buried under operational debt. When a model owner asks, “Can you deploy this?”, a long and error-prone journey begins:

Intake: The team gathers model details — framework, weights, dependencies, hardware needs.

Packaging: Engineers manually build containers, sometimes pulling assets from personal machines.

Deployment: Someone provisions GPUs, configures ingress, pushes YAMLs, and runs smoke tests.

Monitoring: Dashboards are stitched together after the fact; cost data comes weeks later.

Decommission: Old models linger, consuming GPU hours indefinitely.

It works — but it doesn’t scale. Every deployment becomes a bespoke project. The missing piece is a platform that can take those manual steps and make them declarative, repeatable, and observable.

What an Inference Service Really Is

An inference service isn’t just a container that runs a model — it’s a system of systems. Think of it as the operating system of AI inference, with three key layers:

Unified API Surface — A consistent interface for text, vision, and speech models (often OpenAI-compatible).

Runtime Abstraction Layer — Bridges model code with optimized serving engines such as vLLM, TensorRT-LLM, or Triton, handling batching, caching, and resource scheduling.

Operational Backbone — The control plane that enforces scaling, quotas, cost visibility, and compliance.

If AI training is the factory floor, the inference service is the logistics network — reliable, auditable, and fast.

Inside the Architecture: Control Plane vs. Data Plane

A scalable inference platform separates intent from execution.

Control Plane: Model registry, deployment policies, routing, authentication, and observability dashboards. It decides what should run, where, and under which constraints.

Data Plane: Model servers (vLLM, Triton, ONNX), caching layers, batching logic, and GPU schedulers. It handles the actual execution and streams metrics back to the control plane.

This decoupling enables self-serve workflows: model owners declare intent, while infrastructure enforces policy.

The Role of GitOps and Reproducibility

Most manual teams depend on tribal knowledge — whoever remembers the right kubectl command. GitOps replaces that fragility with auditable automation.

All deployment configurations live in Git. A change (e.g., new model version) triggers a pipeline; a controller such as ArgoCD or GitLab’s Kubernetes agent reconciles the cluster to match Git state. Benefits:

Traceability: every deployment and rollback is recorded.

Reproducibility: dev, staging, and prod stay consistent.

Compliance: approvals happen through pull requests, not Slack messages.

Git becomes the source of truth for the inference layer.

MLflow and GitLab: The Backbone of Automation

Two tools anchor the modern inference lifecycle.

MLflow manages the what: experiment tracking, lineage, and model registry.

Each trained model, metrics, and artifact is versioned.

Stage transitions (Staging → Production) trigger validations and policies.

It preserves reproducibility and evaluation context.

GitLab manages the how: CI/CD, policy, and GitOps.

Builds serving images (Docker or Truss), runs tests and scans, pushes to the container registry.

Updates deployment manifests automatically through merge requests.

Enforces approvals and gives complete audit trails.

Together they form a closed loop:

A model promoted in MLflow triggers GitLab pipelines, which deploy the right container into production under GitOps control.

This transforms deployments from ticket requests into governed, observable workflows.

From Manual Ops to Self-Serve Platforms

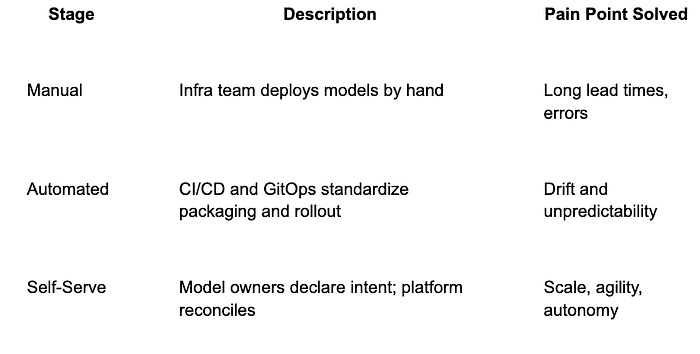

The evolution usually follows three stages:

Press enter or click to view image in full size

Key building blocks for this transition:

Truss or internal templates for consistent model packaging.

ArgoCD or GitLab CI for automated rollout.

Unified manifests (ModelDeployment CRDs) for declaring resources.

RBAC and quotas for governance.

In the self-serve world, infrastructure enforces guardrails — not gates.

Observability, Cost, and Predictive Orchestration

You can’t optimize what you can’t see. A mature inference layer collects:

Metrics: latency, tokens/sec, cache hit rates, GPU utilization.

Traces: end-to-end flow from request → model → response.

Cost telemetry: per-tenant GPU-hour, per-token, and per-endpoint metering.

With those signals, you move from reactive to predictive orchestration — forecasting traffic, pre-warming models before bursts, and throttling low-priority workloads during peaks. It’s the difference between scaling when you must and scaling when you should.

Security and Compliance as First-Class Citizens

Enterprise adoption depends on trust. Inference services must respect data boundaries and governance policies:

VPC isolation and private links

Customer-managed keys and encrypted logs

Region pinning for data residency

Signed artifacts and audit trails

Automatic redaction of prompts and outputs containing PII

When compliance is baked in rather than bolted on, AI teams can ship faster and sleep better.

The Future Platform

The next generation of inference platforms will look like this:

Model owners declare intent via API or UI.

The control plane validates policy and triggers CI/CD automatically.

Runtime plane auto-optimizes for latency, cost, and load.

Telemetry streams back for analytics, forecasting, and billing.

MLflow handles lineage and model promotion; GitLab enforces governance.

Predictive models continuously adjust scaling and resource allocation.

Inference will evolve from a service to a self-aware, self-healing infrastructure layer — consistent across on-prem, hyperscaler, and hybrid environments.

Lessons from the Field

Standardization beats heroics. Reusable packaging and manifests cut onboarding time dramatically.

GitOps is non-negotiable. It’s your compliance and rollback mechanism.

Predictive scaling pays for itself. Right-sizing GPUs through telemetry reduces costs by 30–40%.

Model lineage matters. MLflow’s registry gives traceability that auditors love.

Conclusion — The Hidden Backbone Revealed

Inference is no longer an afterthought. It’s where reliability, cost, and trust converge. A scalable inference service turns models into products, experimentation into operations, and infrastructure into intelligence.

At ParallelIQ, we believe the future of AI infrastructure is predictive, compliant, and cost-aware — a platform that anticipates demand, respects boundaries, and keeps every GPU hour accountable.

Training builds intelligence. Inference delivers it.