AI/ML Model Operations

Setting the Foundation — Why DevOps Must Evolve

The Shift No One Can Ignore

For more than a decade, DevOps has been the invisible engine of software velocity — the reason companies can ship features weekly instead of yearly. But what happens when the thing you’re deploying is learning?

Traditional DevOps was designed for code: deterministic, testable, repeatable. Yet AI has introduced an entirely new species of software — one that adapts, evolves, and sometimes behaves unpredictably. Pipelines that were once optimized for speed now collide with uncertainty.

We’re entering an era where DevOps must evolve — from managing releases to managing intelligence.

DevOps: Built for Code, Not for Models

Let’s remind ourselves why DevOps became so powerful:

Continuous Integration / Continuous Delivery (CI/CD): faster iteration loops

Automation: repeatable deployments across environments

Infrastructure as Code: reproducible infrastructure setups

Feedback loops: detect failures early, recover fast

These principles transformed how we ship applications. But they assume one crucial thing: the artifact — the code — always behaves the same way.

AI breaks that assumption.

In classic DevOps, your artifact is a binary. In AI, your artifact is a probability distribution.

Where the Old Model Breaks

As AI systems moved into production, the cracks began to show:

Non-determinism: Train a model twice with the same data and code, and you can still get different weights and slightly different results unless random seeds, data ordering, and deterministic kernels are all pinned.

Data dependency: Success now depends as much on which data was used as on what code was written.

Hardware complexity: GPUs, TPUs, and specialized accelerators are not plug-and-play — they need orchestration and cost control.

Lifecycle drift: Models decay over time as real-world data shifts. Your best model today might underperform next month.
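To make the non-determinism point concrete, here is a minimal sketch in plain Python. It is a toy stochastic gradient descent loop on a made-up dataset, not a real training framework, and the function name `train` and its parameters are assumptions chosen for illustration. The only thing that changes between runs is the random seed, which controls weight initialization and shuffle order.

```python
import random

def train(seed, data, epochs=20, lr=0.05):
    """Fit y ~ w*x + b with SGD; the seed controls init and shuffle order."""
    rng = random.Random(seed)
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
    rows = list(data)
    for _ in range(epochs):
        rng.shuffle(rows)              # sample order depends on the seed
        for x, y in rows:
            err = (w * x + b) - y      # prediction error for this sample
            w -= lr * err * x          # gradient step on the weight
            b -= lr * err              # gradient step on the bias
    return w, b

# Fixed toy dataset (illustrative values only): y = 2x + 1 plus a small fixed offset
data = [(x / 10, 2 * (x / 10) + 1 + ((-1) ** x) * 0.05) for x in range(10)]

run_a = train(seed=1, data=data)
run_b = train(seed=2, data=data)   # same data, same code, different seed
run_c = train(seed=1, data=data)   # same seed as run_a

print("seed 1:", run_a)
print("seed 2:", run_b)
print("seed 1 again identical:", run_a == run_c)
```

In this toy case, pinning the seed is enough to reproduce a run exactly. Real GPU training has further sources of variation, such as the order of parallel floating-point reductions, that a seed alone may not remove.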

These challenges aren’t bugs in DevOps — they’re signs that the paradigm itself needs to expand.

The New Pillars of DevOps in the AI Age

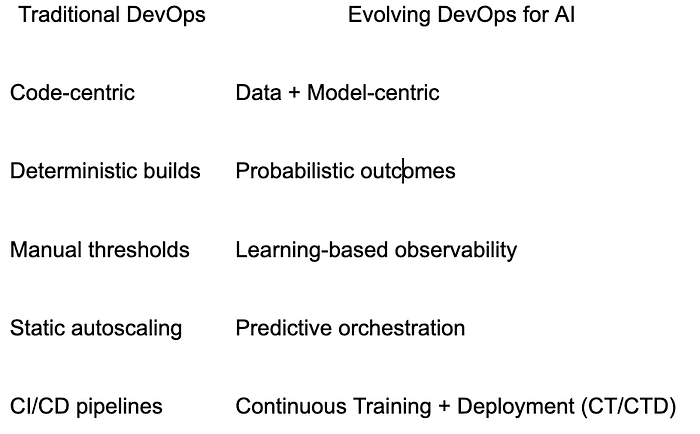

To stay relevant, DevOps must evolve across several dimensions:

Press enter or click to view image in full size

This evolution doesn’t replace DevOps — it extends it. We’re moving from pipelines that deploy code to systems that deploy intelligence.

The Rise of New Disciplines

The ecosystem is already reacting to this shift:

DataOps ensures versioned, high-quality datasets.

MLOps automates the model-building workflow: training, validation, and packaging for release.

ModelOps governs deployment, rollback, and monitoring of models.

AIOps uses AI itself to optimize infrastructure operations.
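One thing these disciplines share is the need to tie a model release to the exact data and code that produced it. The sketch below shows one way that could look, using only the Python standard library. The helper names (`dataset_fingerprint`, `release_record`), the model name, and the code version string are all hypothetical, not part of any particular tool.

```python
import hashlib
import json

def dataset_fingerprint(rows):
    """Return a SHA-256 digest over the rows, independent of row order."""
    h = hashlib.sha256()
    # Serialize each row canonically, then sort so ordering does not matter.
    for line in sorted(json.dumps(r, sort_keys=True) for r in rows):
        h.update(line.encode("utf-8"))
        h.update(b"\n")
    return h.hexdigest()

def release_record(model_name, code_version, rows):
    """Bundle the identifiers needed to trace a model back to its inputs."""
    return {
        "model": model_name,
        "code_version": code_version,
        "data_sha256": dataset_fingerprint(rows),
    }

# Hypothetical datasets: v2 adds one row to v1.
rows_v1 = [{"id": 1, "label": 0}, {"id": 2, "label": 1}]
rows_v2 = rows_v1 + [{"id": 3, "label": 1}]

record_v1 = release_record("churn-model", "abc123", rows_v1)
record_v2 = release_record("churn-model", "abc123", rows_v2)

# Same code version, different data: the records differ only in the data hash.
print(json.dumps(record_v1, indent=2))
print(json.dumps(record_v2, indent=2))
```

Storing a record like this next to each model artifact gives a rollback or audit step something concrete to compare, even when the code version has not changed.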

Each emerged to patch one piece of the gap DevOps left open. But in reality, they’re all converging back into a single unified vision — Intelligent DevOps — a discipline that brings automation, intelligence, and adaptivity under one roof.

Toward Intelligent Infrastructure

Imagine pipelines that anticipate model drift before it affects production. Imagine GPU clusters that scale predictively, not reactively. Imagine observability systems that not only detect anomalies but understand them. And imagine compliance frameworks woven into those same pipelines — continuously auditing bias, data lineage, privacy, and latency budgets without manual intervention.
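A small building block for that kind of pipeline is a scheduled drift check. The sketch below computes the Population Stability Index (PSI) between a baseline feature sample and a recent one. PSI is one common choice among several, the function name `psi` is an assumption, the data is synthetic, and the 0.25 alert threshold is a widely used rule of thumb rather than a standard.

```python
import math

def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0    # fall back to 1.0 if the baseline is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1   # clamp out-of-range values
        # Floor each share at eps so the logarithm stays defined for empty bins.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Synthetic data: the "live" sample is the baseline shifted upward by 0.5.
baseline = [i / 100 for i in range(100)]
live = [v + 0.5 for v in baseline]

DRIFT_THRESHOLD = 0.25   # assumed rule-of-thumb cutoff, tune per feature

score_same = psi(baseline, baseline)
score_shifted = psi(baseline, live)

print("no shift:", score_same)
print("shifted:", score_shifted, "alert:", score_shifted > DRIFT_THRESHOLD)
```

A check like this detects drift in inputs after it has started. Anticipating it, as described above, would need trend tracking on top of scores like these.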

That’s the future we’re moving toward — infrastructure that learns.

The future of DevOps isn’t about shipping code faster. It’s about shipping intelligence responsibly.

What Comes Next

This post sets the foundation for a new conversation: what DevOps becomes when your product is an evolving model.

In the next article of this series, we’ll dive deeper into why traditional CI/CD pipelines collapse under AI workloads, and how we can rethink them for continuous learning.

If your organization is rethinking how DevOps should evolve for AI-driven workloads, reach out to ParallelIQ for an invitation to our upcoming sessions on AI-ready infrastructure and predictive orchestration.

#DevOps #AIOps #MLOps #Infrastructure #ParallelIQ #AIInfrastructure #PredictiveOrchestration #ModelOps