AI/ML Model Operations

Cloud-Native Had Kubernetes. AI-Native Needs ModelSpec

For anyone who lived through the rise of cloud-native, the pattern unfolding in AI today feels familiar.

Before Kubernetes, every team packaged, configured, and deployed applications in their own way. YAMLs lived in random repos, operators hard-coded logic into scripts, and scaling or routing decisions were tribal knowledge encoded in someone’s head. Cloud-native only took off when the community agreed on a shared description of how applications should run.

That description — the Kubernetes manifest — became the interface that unlocked the entire ecosystem.

Today, AI is missing that layer.

AI Is Still Pre-Standardization — and It Shows

Every modern ML team asks the same questions:

Which GPU should I run this model on?

What batch size will avoid OOM but still maximize throughput?

How do I express latency budgets, token limits, or routing rules?

How should I handle replicas, autoscaling, or warm pools?

Where do I capture the nuances of a fine-tuned model?

Right now, each answer ends up scattered across:

a Python file

a Helm chart

an inference-service YAML

a config map

tribal knowledge

Slack threads

It’s exactly where cloud-native was in 2013: lots of powerful tools, but no shared language tying them together.

Cloud-Native’s Breakthrough Was Declarative Description

Containers weren’t enough. Kubernetes wasn’t enough. The turning point was a specification:

You declare what the system should run

The platform determines how to run it

Automation becomes safe and repeatable

Community tools converge on a common interface

The ecosystem blossomed because everyone spoke YAML in the same way. That is precisely what AI is missing.

There is no consistent, community-agreed way to describe:

A model’s identity

Its resource envelope

Its latency or cost constraints

Its inference behavior

Its routing rules

Its safety and compliance posture

Its operational SLOs and monitoring expectations

Every organization invents its own version — and the fragmentation costs millions in GPU waste, operational complexity, and engineering effort.

**What Kubernetes Manifests Were for Cloud-Native… ModelSpec Can Become for AI-Native**

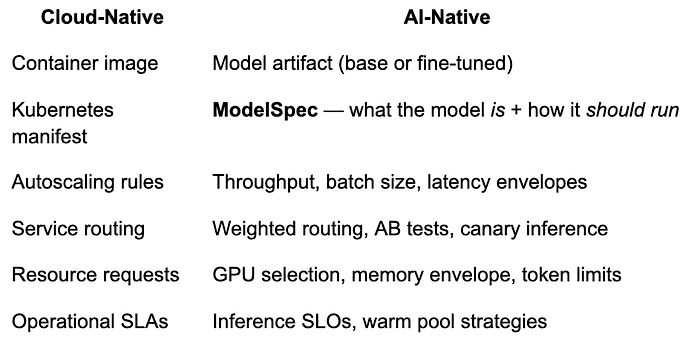

This is the core analogy:

Press enter or click to view image in full size

Cloud-native standardized the way applications were described. AI-native now needs a standard way to describe a model — not just its weights, but its operational behavior.

What ModelSpec Is Not

Because it’s important to avoid confusion:

It’s not an inference runtime (vLLM, TGI, Triton, etc.)

It’s not a framework (PyTorch, TensorFlow, JAX)

It’s not a serving platform (SageMaker, Baseten, Ray Serve)

It’s not a model format (ONNX, GGUF, Safetensors)

ModelSpec sits above all of them.

Just like Kubernetes manifests describe desired state, a ModelSpec describes:

resource constraints

performance expectations

cost envelope

routing logic

pre-processing and post-processing behaviors

model lineage and fine-tuning provenance

inference-specific SLOs

compliance and safety settings

SLO and monitoring requirements

It becomes the contract between model authors, infra teams, and the AI runtime.

Why AI-Native Suddenly Needs This Layer

Because the old assumptions no longer scale:

1. Models now shape infrastructure

Cloud-native was infra → app.

AI-native is app → infra.

The model dictates:

GPU type

batch sizes

memory behavior

throughput ceilings

scaling decisions

This inversion demands a formal description.

2. Costs are too high for guesswork

Every bad batch-size experiment burns GPU dollars. Every OOM forces developers to restart the cycle. A specification allows analysis before running.

3. Fine-tuning breaks assumptions

A fine-tuned model behaves differently than its base. Without a spec layer, every deployment becomes a surprise.

4. Automation requires structure

Autoscaling, routing, SLO enforcement, and drift detection all depend on structured metadata — not scattered configs.

ModelSpec (Coming Soon)

For the last several months, I’ve been working on a community-friendly ModelSpec: a declarative description of model identity, resource envelope, performance expectations, and operational constraints — the missing interface for AI-native infrastructure.

It’s not a product announcement.

It’s not a framework.

It’s a specification, the same way cloud-native had one.

In upcoming posts, I’ll:

share early examples

walk through how ModelSpec integrates with existing runtimes

show how it unlocks predictive orchestration, better autoscaling, and safer deployments

invite design partners to contribute ideas before the spec is finalized

Closing Thought

Cloud-native reached escape velocity only when the ecosystem aligned around a shared description of applications.

AI-native will require the same — and ModelSpec is a step toward that future.

Follow along as we open up the design, share early drafts, and invite the community to shape the standard.