AI/ML Model Operations

Bare-Metal GPU Stacks: The Hidden Alternative to Hyperscalers

Introduction: Why Bare-Metal GPU Stacks Are Surging

AI workloads are exploding, and so are the bills. Mid-market companies and startups often begin their journey on hyperscalers, cushioned by credits and a rich managed ecosystem. But once credits expire, the reality sets in: training large models on the cloud can cost millions annually, with little control over performance tuning or GPU utilization.

This is why bare-metal GPU stacks are surging in popularity. Instead of renting GPUs behind layers of virtualization and abstraction, bare-metal gives teams direct access to raw GPU servers — no hypervisor tax, no noisy neighbors, no hidden scheduling delays. For compute-heavy AI training and latency-sensitive inference, those differences translate into both faster performance and lower costs.

Startups chasing runway and mid-market firms squeezed by cloud margins are both arriving at the same conclusion: the hyperscaler premium doesn’t scale. Bare-metal GPU providers, often via VPS-style offerings, are emerging as the smart alternative — offering the same NVIDIA H100s or A100s, but at a fraction of the price and with more predictable performance.

But with greater control comes greater responsibility. Bare-metal shifts the burden of orchestration, scheduling, and monitoring back onto the customer. The organizations that succeed will be those that balance the cost savings of bare-metal with the operational discipline of AI DevOps.

Top VPS GPU providers in 2025 span three main categories.

Major clouds like AWS, Google Cloud, and Azure offer extensive managed services and integrations — but often at the highest cost.

Specialized AI/ML providers such as Lambda Labs, RunPod, Paperspace, CoreWeave, Vast.ai and newer players like Fluidstack, Nebius, Together AI and Civo deliver raw GPU power, Kubernetes-native orchestration, and pricing models tuned for training and inference workloads.

Cost-conscious GPU providers — options like Vultr (GPU plans), Vast.ai, and Genesis Cloud focus on affordability and transparency, making them attractive for startups looking to stretch budgets.

The best choice depends on your priorities: features and ecosystem (hyperscalers), raw AI/ML performance (specialized providers), or value-for-money (budget clouds). We’ll dive deeper into specific providers like AWS, Lambda Labs, CoreWeave, Fluidstack, and Civo — and their business models — in an upcoming post.

What “Bare-Metal GPU” Actually Means (Technical Primer)

When we talk about bare-metal GPUs, we’re really talking about the entire stack that sits between the silicon and your AI workloads. Unlike hyperscalers, which add multiple layers of abstraction, bare-metal setups expose the hardware directly — giving engineers fine-grained control, but also placing more responsibility on them.

Think of it in layers:

1. Hardware Layer

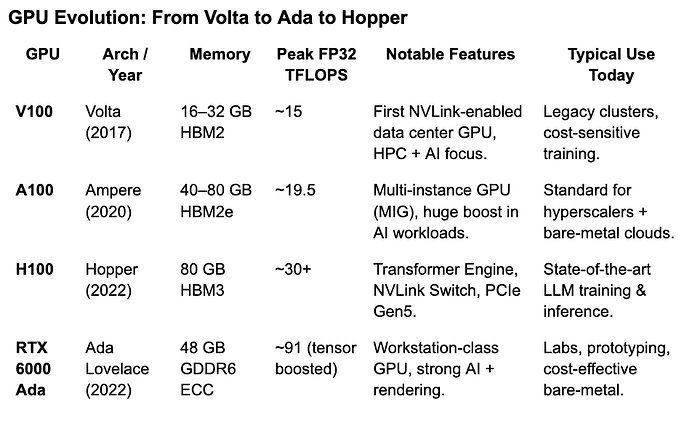

NVIDIA A100/H100, RTX 6000 Ada, or older V100s.

Memory bandwidth (HBM3 vs GDDR6) and GPU interconnects (PCIe, NVLink, NVSwitch).

Networking fabric: InfiniBand or RoCEv2 for multi-node training.

Press enter or click to view image in full size

2. Orchestration Layer

Cluster managers like Slurm, Kubernetes, or Ray.

GPU scheduling policies (gang scheduling, time-slicing, preemption).

Container runtime and driver management (CUDA, NCCL).

3. Workload Layer

AI frameworks: PyTorch, TensorFlow, JAX.

Distributed training with NCCL / Horovod.

Inference stacks: NVIDIA Triton, KServe, TorchServe.

This is what makes bare-metal attractive: you’re not paying for the hyperscaler’s virtualization tax, and you can tune each layer for maximum throughput. But it also means you’re responsible for aligning CUDA with the right drivers, optimizing NCCL for your network, and making sure schedulers don’t leave GPUs idle.

Technical Advantage (Training Workloads)

Why are teams moving to bare-metal? Beyond cost, the technical edge is often decisive:

🚀 Performance

With direct access to GPUs, there’s no virtualization tax.

NVLink and NVSwitch interconnects enable high-bandwidth, low-latency communication between GPUs in the same server.

For large-scale training jobs (transformers, diffusion models), this means faster convergence and better scaling efficiency.

📏 Predictability

In hyperscalers, workloads can be impacted by “noisy neighbors” — other tenants sharing underlying hardware.

Bare-metal eliminates this: your GPUs are yours alone, which means consistent performance across runs.

This predictability is critical for benchmarking models, running hyperparameter sweeps, or managing tight iteration cycles.

🌐 Networking

Many bare-metal providers wire their clusters with InfiniBand or RoCEv2 (RDMA over Converged Ethernet).

These high-performance fabrics cut communication overhead for distributed training, where NCCL all-reduce operations can otherwise become the bottleneck.

The result: higher scaling efficiency as you grow from a few GPUs to hundreds.

In short: bare-metal GPU stacks unlock the full potential of modern accelerators. They provide raw speed, consistent behavior, and the network backbone required for state-of-the-art training workloads.

Technical Challenges of Bare-Metal GPU Stacks (Training Workloads)

Bare-metal isn’t a free lunch. The very control that makes it attractive also shifts operational burden onto the customer. Key challenges include:

⚙️ Driver & Framework Management

On hyperscalers, CUDA, cuDNN, and NCCL are pre-integrated with the platform.

On bare-metal, you own version alignment. A mismatched CUDA/NCCL setup can cause jobs to stall or scale poorly across nodes.

🖥️ Orchestration Complexity

Services like SageMaker or Vertex AI abstract away cluster scheduling.

With bare-metal, you must configure Kubernetes, Slurm, or Ray yourself — tuning gang scheduling, time-slicing, and preemption policies to avoid idle GPUs.

🔍 Debugging & Monitoring

In managed clouds, you get built-in dashboards and logs.

Bare-metal requires setting up observability stacks (Prometheus, Grafana, OpenTelemetry) and workload monitoring (Evidently, Arize, Fiddler) on your own.

📦 Operational Overhead

Node pool scaling, driver patching, container runtime updates, and CI/CD pipelines all need in-house ops discipline.

Without strong processes, clusters drift into “snowflake” states that are brittle and expensive to maintain.

📉 Vendor Fragmentation

Not all VPS/bare-metal providers are equal. Some offer InfiniBand and high-bandwidth networking, others don’t.

Picking the wrong vendor can negate the technical advantages.

In short: bare-metal stacks trade managed convenience for control. The winners are the teams that can pair strong AI/DevOps skills with observability and scheduling discipline.

Inference-Specific Advantages of Bare-Metal GPU Stacks

⚡ Ultra-Low Latency

No hypervisor layer = fewer context switches and less jitter.

This matters for real-time inference: fraud detection, personalized recommendations, conversational AI.

📈 Predictable Throughput

With dedicated GPUs, you avoid noisy neighbor effects that can spike response times.

Consistent P95/P99 latencies make it easier to meet SLAs.

🌐 Edge & Hybrid Deployments

Bare-metal GPUs can be colocated closer to users (edge data centers).

This reduces network hops vs. routing everything through hyperscaler regions.

Inference-Specific Challenges of Bare-Metal GPU Stacks

⚡ Cold Starts & Scaling

Hyperscalers: Offer serverless inference endpoints and auto-scaling GPU pools. Cold starts still exist, but the burden of scaling up and down is mostly abstracted away. For many teams, this means faster iteration but also less visibility into cost and utilization.

Bare-Metal / VPS: No built-in serverless layer. To handle unpredictable traffic or bursts, teams often pre-warm GPUs (keeping them spun up and idle) or overprovision capacity. This ensures low latency but drives up idle costs. Without smart workload schedulers and observability, those “insurance GPUs” become silent budget drains.

👉 The trade-off: Hyperscalers simplify scaling but at a premium. Bare-metal delivers control and cost efficiency, but only if you build the scheduling discipline to match workload patterns.

🛠️ Serving Infrastructure Setup

You must deploy and maintain serving stacks yourself (e.g., NVIDIA Triton, KServe, TorchServe).

Rollouts, rollbacks, and blue/green deployments aren’t turnkey — they need CI/CD discipline.

📉 Model Monitoring

Inference quality can silently degrade (drift, bias, data quality issues).

Hyperscaler platforms often bundle monitoring; bare-metal customers must stitch together tools like Evidently, Arize, or Fiddler.

Cost/Performance Comparison

The biggest reason startups and mid-market firms look at bare-metal GPU stacks is simple: cost efficiency without sacrificing performance. But the comparison isn’t always apples-to-apples.

💰 Cost Per GPU-Hour

Hyperscalers often charge $3–$4/hr for an A100 (on-demand), with premium networking and storage adding more.

Bare-metal GPU providers (VPS/cloud-native colocation) often offer the same A100s at $1.50–$2/hr — roughly half the cost.

For long-running training workloads, those differences add up to six- or seven-figure savings annually.

⚡ Performance Per GPU

Bare-metal eliminates virtualization overhead → more consistent throughput.

InfiniBand/RDMA networking enables better scaling efficiency for distributed training (higher GPU utilization as you scale up).

In inference, bare-metal delivers lower tail latency (P95/P99), which is critical for SLAs.

📊 The Real Trade-Off

Hyperscalers bundle ecosystem services: managed orchestration, autoscaling, monitoring, compliance.

Bare-metal strips away the managed layer — you save money, but you need in-house or partner expertise to manage the stack.

Case Examples: Bare-Metal in Action

🚀 Startup Training at Scale

A startup training GPT-like language models shifted from hyperscalers to a bare-metal provider. The result? 40% cost savings on GPU hours while improving scaling efficiency for distributed training. But the trade-off was clear: they needed to build in-house AI Ops talent to manage orchestration, drivers, and observability. For a lean team, the learning curve was steep — but the cost savings extended their runway by months.

🏢 Mid-Market Inference at the Edge

A mid-market SaaS firm running personalized recommendations adopted bare-metal GPUs in edge data centers closer to its customers. By eliminating hyperscaler latency overhead, they cut P99 inference latency from ~1.5s to under 400ms. This not only improved user experience but also boosted conversion rates, proving that latency isn’t just technical — it’s directly tied to revenue.

Together, these stories highlight the promise and pitfalls of bare-metal stacks: they can be transformative for cost and performance, but only if organizations invest in the right operational practices.

Strategic Implications

When to Go Bare-Metal

Long-running training workloads (transformers, diffusion models) where GPU hours dominate the bill.

Teams with strong DevOps/AI Ops capabilities who can manage orchestration, drivers, and observability in-house.

Use cases demanding predictable performance (no noisy neighbors, stable scaling).

When to Stick with Hyperscalers

Early-stage projects where credits cover the burn and speed-to-experiment is more valuable than cost efficiency.

Organizations that rely heavily on managed services (SageMaker, Vertex AI, Azure ML) for compliance, autoscaling, and monitoring.

Teams without dedicated ops resources who need “AI infrastructure as a service.”

Why Hybrid Often Wins

Training large jobs on bare-metal to capture cost savings, while bursting into the cloud for short-term spikes or exploratory experiments.

Running steady-state inference on bare-metal edge deployments, while keeping fallback capacity in the cloud for global coverage.

Hybrid allows firms to balance cost, scale, and agility — but only if they build a unified observability and scheduling layer across environments.

The takeaway: there’s no one-size-fits-all answer. The organizations that succeed will be those that treat GPUs like a portfolio — running workloads where they make the most economic and performance sense.

Closing: Where ParallelIQ Fits In

Bare-metal GPU stacks aren’t a silver bullet. They can slash costs and unlock performance, but only if you have the right monitoring, scheduling, and operational discipline in place. Otherwise, savings on paper turn into idle GPUs, brittle clusters, and frustrated teams.

That’s where ParallelIQ comes in. We help growth-stage startups:

Audit workloads to uncover hidden GPU waste.

Design hybrid strategies that balance bare-metal efficiency with cloud agility.

Build observability stacks that catch stalls, drift, and runaway costs before they hurt the business.

👉 The question isn’t whether bare-metal or cloud is “better.” The question is whether your organization can execute at scale without losing control. At ParallelIQ, we make sure the answer is yes.

[Schedule a call → here]

#AIInfrastructure #GPUs #BareMetal #CloudComputing #ParallelIQ