AI-Native vs. Cloud-Native: The Next Great Divide in Startup Infrastructure

Introduction: A Shift in the Startup DNA

Over the past decade, “cloud-native” became the defining playbook for modern startups. Containers, microservices, and CI/CD pipelines reshaped how software was built and deployed. Speed, elasticity, and reliability became the hallmarks of success.

But a new generation of startups is emerging: ones that don’t just run on the cloud but think in models. For these companies, data is the code, GPUs are the compute fabric, and intelligence is the product. Welcome to the era of the AI-native startup.

While both cloud-native and AI-native companies share a commitment to scalability and automation, their operational realities could not be more different. Cloud-natives optimize for request throughput and uptime. AI-natives optimize for model accuracy, training cost, and explainability. Cloud-natives manage apps; AI-natives manage learning systems.

This shift isn’t just technical — it’s structural. The compliance, observability, and infrastructure principles that served the cloud generation no longer suffice in an AI-driven world. As regulatory and ethical expectations rise, startups must learn to treat AI governance with the same rigor cloud-natives once applied to DevOps.

The companies that master this transition — blending cloud reliability with AI accountability — will define the next decade of software.

What It Means to Be Cloud-Native

Being cloud-native means more than simply running workloads in AWS or GCP. It’s about designing software that’s born in the cloud — architected for elasticity, automation, and resilience from day one.

Cloud-native companies build their products as distributed systems of services, not monoliths. They rely on containers, Kubernetes, microservices, CI/CD pipelines, and managed databases to move fast, scale effortlessly, and recover automatically. Every component — from deployment to monitoring — is programmable and version-controlled.

For the past decade, this model has powered the rise of SaaS giants and digital platforms. It allowed small teams to achieve enterprise-grade uptime and release velocity. Cloud-native thinking turned infrastructure into code, and DevOps into culture.

At its core, the cloud-native philosophy can be summarized as:

“Ship quickly, recover automatically, and scale without friction.”

Compliance and security followed suit. Standards and regulations like SOC 2, ISO 27001, and GDPR became the standard badges of maturity, signaling reliability and building customer trust. But they were designed for software delivery, not model delivery.

And that’s where the next paradigm begins to diverge — because AI-native companies don’t just deliver code; they deliver learning behavior. Their infrastructure, risks, and compliance obligations extend far beyond what cloud-native playbooks ever had to consider.

The Rise of AI-Native Companies

If cloud-native was about shipping software faster, AI-native is about learning faster.

AI-native companies are not just deploying code — they’re deploying intelligence. Their core assets aren’t APIs and front-end services but data pipelines, model weights, and GPU cycles. They don’t manage monoliths or microservices; they orchestrate experiments, retraining loops, and inference pipelines.

In an AI-native startup, engineering and research converge. The product itself learns, adapts, and sometimes misbehaves. That means observability, governance, and compliance must now cover not only uptime and latency, but also data provenance, model drift, and bias detection.
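Model drift is one of the signals that classic uptime dashboards miss. As a minimal sketch, assuming drift is tracked with a Population Stability Index (PSI) over a single numeric feature, a check might look like the following. PSI is a common choice, not a method this article prescribes, and the function name, bin count, and sample data are all illustrative.

```python
import math


def population_stability_index(baseline, current, bins=5):
    """Compare two samples of one numeric feature; larger values mean more drift."""
    lo, hi = min(baseline), max(baseline)
    # Equal-width bins over the baseline range; fall back to 1.0 if the range is zero.
    width = (hi - lo) / bins or 1.0

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range are clamped into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty bins (it inflates PSI slightly).
        return [max(c / len(values), 1e-6) for c in counts]

    b, c = shares(baseline), shares(current)
    return sum((ci - bi) * math.log(ci / bi) for bi, ci in zip(b, c))


# Hypothetical data: training-time feature values vs. shifted production values.
baseline = [i / 100 for i in range(100)]
shifted = [v + 0.3 for v in baseline]

print(population_stability_index(baseline, baseline))
print(population_stability_index(baseline, shifted))
```

Identical samples score zero, and the score grows as the production distribution moves away from the training one. The alert threshold is a judgment call for each team and feature.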

These companies live at the intersection of three volatile elements:

Compute — GPUs that must be efficiently scheduled, shared, and monitored to avoid runaway cost.

Data — constantly evolving, high-volume, and often containing sensitive or regulated information.

Models — dynamic entities that must be versioned, explainable, and reproducible.
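One way to make "versioned and reproducible" concrete is to derive a run identifier from everything that went into training. The sketch below is an assumption about how such a record could be built, not a description of any particular tool: `TrainingRecord`, its fields, and the sample values are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


def fingerprint(payload: bytes) -> str:
    """Return a SHA-256 hex digest, used here as a content-addressed version id."""
    return hashlib.sha256(payload).hexdigest()


@dataclass(frozen=True)
class TrainingRecord:
    dataset_sha256: str     # hash of the exact training data
    environment: dict       # hypothetical: runtime versions, image tag, seed
    hyperparameters: dict

    def run_id(self) -> str:
        # Canonical JSON (sorted keys) so identical inputs always hash the same.
        canonical = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return fingerprint(canonical)[:16]


# Hypothetical inputs for illustration only.
dataset_bytes = b"user_id,label\n1,0\n2,1\n"
record = TrainingRecord(
    dataset_sha256=fingerprint(dataset_bytes),
    environment={"python": "3.11", "seed": 42},
    hyperparameters={"lr": 0.001, "epochs": 3},
)
print(record.run_id())
```

Because the identifier is derived from the dataset hash, environment, and hyperparameters, any change to one of them produces a different id, which is what lets a model artifact be traced back to the run that produced it.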

To manage this complexity, AI-natives build on a different stack entirely:

GPU infrastructure from specialized providers like CoreWeave, Lambda, or Crusoe.

Distributed training frameworks such as Ray, PyTorch DDP, or Kubeflow.

Experiment tracking and model governance via MLflow, Weights & Biases, or in-house tools.

Inference orchestration that scales models on demand while maintaining latency guarantees.

Their measure of success isn’t deployment velocity — it’s the cost per accurate prediction and time to insight.
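"Cost per accurate prediction" has no single standard definition. One plausible reading, used here purely as an assumption, is total compute spend divided by the number of correct predictions. The function and parameter names below are illustrative.

```python
def cost_per_accurate_prediction(gpu_hours, usd_per_gpu_hour, predictions, accuracy):
    """Total GPU spend divided by the number of correct predictions.

    Assumes `accuracy` is the fraction of predictions that were correct (0 < accuracy <= 1)
    and that GPU time is the only cost that matters, which is a simplification.
    """
    if predictions <= 0 or not 0 < accuracy <= 1:
        raise ValueError("predictions must be positive and accuracy in (0, 1]")
    total_cost = gpu_hours * usd_per_gpu_hour
    return total_cost / (predictions * accuracy)


# Hypothetical comparison: a cheaper model vs. a more accurate but costlier one.
small = cost_per_accurate_prediction(100, 2.50, 1_000_000, 0.80)
large = cost_per_accurate_prediction(150, 2.50, 1_000_000, 0.90)
print(f"small: ${small:.6f}  large: ${large:.6f}")
```

In this made-up comparison the more accurate model still costs more per correct answer, which is the kind of trade-off the metric is meant to surface.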

In the AI-native world, every improvement in infrastructure translates directly into model intelligence, not just developer productivity.

And yet, this power comes with risk. Because models are trained, not programmed, their behavior depends on how well data, training environments, and observability are managed. That’s why compliance — once a checkbox exercise for SaaS — has become an existential concern for AI-native companies.

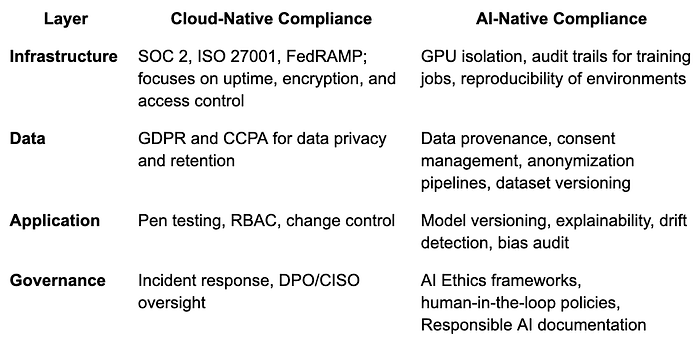

The Compliance Divide

Compliance used to mean checking boxes: encrypt data, log access, run annual audits, and publish a SOC 2 report. That framework worked well for cloud-native companies whose primary risks were data breaches, downtime, and privacy violations.

But AI-native companies live in a different reality. Their systems don’t just store data — they learn from it, generate new outputs, and influence decisions. As a result, the compliance conversation is shifting from security to accountability.

Let’s look at how the two worlds diverge:

For cloud-natives, compliance is about protecting what they build. For AI-natives, it’s about proving what they learned — that the data was obtained legally, the model was trained responsibly, and the results can be explained or reversed if needed.

Regulations are evolving quickly to reflect this new world:

EU AI Act introduces risk tiers for AI systems, demanding documentation of training data and model transparency.

NIST AI Risk Management Framework outlines principles of accountability and explainability.

ISO/IEC 42001 (AI Management System) now complements ISO 27001 to formalize Responsible AI operations.

In short, AI compliance starts where cloud compliance ends — extending the same rigor that once applied to infrastructure into the entire lifecycle of data and models.

The cloud era asked, “Is your system secure?”

The AI era asks, “Is your system trustworthy?”

And that distinction is where many startups are getting caught off guard. As AI-native companies scale, compliance isn’t just about passing audits — it’s about preserving credibility with customers, investors, and regulators.

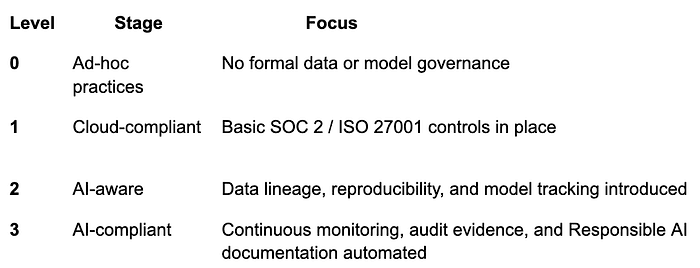

Why This Matters for Startups

For early-stage startups, compliance often feels like bureaucracy — a problem to solve “later.” But for AI-native startups, “later” comes fast.

As soon as they start selling to enterprise customers or regulated industries, they encounter the same roadblock:

“Can you show us your data-handling policies, audit trails, and model governance framework?”

Without clear answers, deals stall. And for investors, that gap signals operational immaturity — a sign that the startup’s growth engine might not be ready to scale responsibly.

Beyond sales friction, there are deeper risks:

Legal exposure: Using unvetted datasets or unlicensed models can trigger IP or privacy violations.

Reputational damage: Model bias, hallucinations, or privacy breaches can undermine trust before product-market fit is even achieved.

Runway compression: Re-engineering infrastructure to become compliant later is expensive — especially when GPU utilization and data movement are already costly.

The new reality is that compliance is shifting left — just like DevOps once did. Startups that integrate governance early can iterate faster, close enterprise deals sooner, and scale with fewer surprises.

This creates a new maturity curve for AI companies:

Investors and enterprise buyers are already using this kind of framework — consciously or not — to judge who’s ready for partnership. And as regulations tighten, compliance maturity will become as critical a growth metric as ARR or burn rate.

For AI startups, compliance isn’t just a cost center — it’s a market enabler.

It’s the difference between being technically impressive and being commercially viable.

How Cloud-Natives Can Evolve — and How ParallelIQ Helps

Cloud-native companies have already mastered something AI-natives often struggle with: operational discipline. They have CI/CD pipelines, observability, access control, and cost management baked into their DNA. But their systems were built for stateless web apps, not for training clusters that consume GPUs by the hour and evolve continuously.

Conversely, AI-native startups move fast with experiments, iterate on data, and deploy new models weekly — yet they often lack the guardrails that keep infrastructure reliable and auditable.

That’s where ParallelIQ fits in.

We help bridge the operational gap between the cloud generation and the AI generation — turning best practices from DevOps into a foundation for MLOps, observability, and compliance-by-design.

Here’s how:

GPU Observability & Cost Efficiency

▸ We measure actual GPU utilization against theoretical peaks and cloud billing to expose idle capacity and overspend.

▸ Result: up to 40% lower GPU cost without reducing performance.

Data Lineage & Model Traceability

▸ We instrument data and model pipelines so that every model can be traced back to its dataset, environment, and training run.

▸ Result: audit-ready infrastructure and faster debugging for model drift.

Compliance Readiness & Continuous Evidence Collection

▸ We align your infrastructure telemetry with established and emerging frameworks (SOC 2, ISO/IEC 42001, the EU AI Act, NIST AI RMF).

▸ Result: automatic compliance evidence instead of manual spreadsheet audits.

Governance Automation

▸ Policy enforcement, GPU-quota management, and model-deployment guardrails — integrated directly into your runtime.

▸ Result: governance that scales with your experiments, not against them.
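To illustrate the GPU observability item above, here is a rough sketch of comparing billed GPU hours with measured utilization to estimate idle spend. It is not ParallelIQ's implementation: the `GpuUsage` fields, the 50% threshold, and the fleet data are hypothetical, and treating unused utilization as wasted spend is a deliberate simplification.

```python
from dataclasses import dataclass


@dataclass
class GpuUsage:
    name: str
    billed_hours: float      # hours charged by the provider
    avg_utilization: float   # 0.0 to 1.0, averaged from sampled utilization metrics
    usd_per_hour: float


def idle_spend(usage: GpuUsage) -> float:
    """Estimate the share of the bill that paid for unused GPU capacity."""
    return usage.billed_hours * usage.usd_per_hour * (1.0 - usage.avg_utilization)


def idle_report(fleet, threshold=0.5):
    """Return (name, idle_usd) for GPUs under the threshold, largest waste first."""
    flagged = [
        (u.name, round(idle_spend(u), 2))
        for u in fleet
        if u.avg_utilization < threshold
    ]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical fleet for one billing month.
fleet = [
    GpuUsage("train-a", billed_hours=720, avg_utilization=0.85, usd_per_hour=2.00),
    GpuUsage("infer-b", billed_hours=720, avg_utilization=0.25, usd_per_hour=2.00),
    GpuUsage("dev-c", billed_hours=200, avg_utilization=0.10, usd_per_hour=1.50),
]
for name, usd in idle_report(fleet):
    print(f"{name}: ~${usd} idle")
```

Ranking by estimated idle dollars rather than raw utilization keeps attention on the GPUs where rightsizing or sharing would save the most.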

Our goal isn’t to slow teams down — it’s to make them faster by being safer. When governance, observability, and cost tracking are part of the runtime, engineers can focus on innovation while leadership gains confidence in scalability and compliance.

At ParallelIQ, we help AI-natives become enterprise-ready — and cloud-natives evolve into true AI infrastructure companies.

Closing: The New Era of Infrastructure Maturity

The last decade was defined by companies that mastered the cloud-native revolution — they turned infrastructure into code, automated everything, and scaled faster than ever before. But the next decade belongs to those who master the AI-native era — where infrastructure doesn’t just serve software, it serves intelligence.

In this new landscape, the line between infrastructure, data, and compliance has blurred. GPU clusters are now as strategic as databases once were. Compliance isn’t a checkbox; it’s a trust architecture — determining which startups can partner with enterprises, handle regulated data, and sustain investor confidence.

The winners will be those who combine the discipline of the cloud-native era with the accountability of the AI-native age — operational excellence fused with ethical intelligence.

▸ Cloud-native gave startups speed.

▸ AI-native demands wisdom.

At ParallelIQ, we help founders and engineering leaders navigate that transition. From GPU observability and data lineage to continuous compliance readiness, we close the gap between innovation and control — so your infrastructure is not just powerful, but provable.

→ Learn how ParallelIQ’s 42-point infrastructure inspection helps AI startups stay efficient, compliant, and enterprise-ready.

#AIInfrastructure #Compliance #AInative #Cloudnative #ParallelIQ #GPUObservability